Copyright ©2026 by Richard Golding

Release: 0.4-review

This book began as many presentations and short documents that I put together for different projects over the years. Those presentations covered topics from basic requirements management to good distributed system design to how to plan and operate a project that was regularly in flux. A few of the documents were retrospectives about why a project had run into trouble or failed. Others were written in an attempt to head off a problem that I could see coming.

I have worked on many projects. Most of these have been about building a complex system, or one that required high assurance—ones where safety or security are critical to their correct operation. Some have gone well, but all have had problems. Sometimes those problems led to the project failing. More often they have cost the project time and money, or resulted in a system that was not as good as it should have been. In every case the problems have required unnecessary effort and pain from the team working on the project.

This raised the question: what could be learned from these projects? How can future projects go better?

I began to sense that there were some common threads among all the education and advice I was putting together for these teams, and the problems they were having. With the help of some colleagues who were working on their own challenging projects, I began to sort through these impressions in order to articulate them clearly and gather them in one place.

I have found that many of the problems I have observed have come at the intersection of systems engineering, project management, and project leadership. Building a complex system effectively requires all three of these disciplines working together. Most of the problems I have seen have arisen from a breakdown in one or more of them: where there is capable project management, for example, but poor systems engineering, or vice versa.

The intersection is about how each of these disciplines contributes to the work of building a system. The intersection is where people maintain a holistic view of the project. It is where technical decisions about system structure interact with work planning; where project leadership sets the norms for how engineers communicate and check each other’s work. It is where competing concerns like cost versus rigor get negotiated. And it is where people take a long view of the work, addressing how to prepare for the work a year or more in the future.

I’ve worked with many people who were good at one of these disciplines, but didn’t understand how their part fit together with others to create a team that could build something complex while staying happy and efficient. I have worked with well-trained systems engineers who knew the tools of their craft, but did not know how or, more importantly, why to use them and how they fit together. I have worked with project managers who had experience with scheduling and risk management and other tools of their craft, but lacked the basic understanding of what was involved in the systems part of the work they were managing. I have also worked with engineers and managers who were tasked with assembling a team, but did not understand what it means to lead a team so that it becomes well-structured and effective.

In other words, they were all good at their individual disciplines but they lacked the basic understanding of how their discipline affects work in other disciplines, and how to work with people in the other disciplines to achieve what they set out to do.

And that brings me to the basic theme of this book: that making systems is a systems problem, an integration problem. The system that is being built will be made of many pieces that, in the end, will have to work well together. This requires at least some people having a holistic, systemic view of the thing being built. The team that builds the system is itself a system, and its parts—its people, roles, and disciplines—need to work together. The team is something to be engineered and managed, and it needs people who maintain a holistic view of how its parts work together.

This book is not a book on systems engineering or project management per se. Rather, it provides an overarching structure that organizes how the systems engineering, project management, and leadership disciplines contribute to systems-building. While I reference material from these disciplines as needed, do not expect (for example) to learn the details of safety analyses here. I do discuss how those analyses fit with the other work needed for building a system, and provide some references to works by people who have specialized in those topics.

This book is for people who are building complex systems, or are learning how to do so. I provide a structure to help think about the problems of building systems, along with ways to evaluate the different approaches one can choose to solve problems for a specific project. I relate experience and advice where I have some.

Using this book. This book covers two topics: the system being built, and how to go about building that system. These topics are intertwined, because the point of going to the effort to build a system is to build a well-functioning system.

The first two parts of this book are meant for everyone, and should be read first. They provide a general foundation for talking about making systems; that is, they present a simplified but holistic view of making systems. Part I presents a short set of case studies to motivate what I’m talking about. Part II presents models for thinking about systems and the making of systems at a high level, along with recommended principles for both.

The two parts that follow provide more detailed discussions of what systems are (Part III) and what systems-building is (Part IV). These parts expand on the material in Part II, providing more structure for talking about each of their subjects. These parts are meant to be read after the foundational parts, but need not be read in order or all at once.

Defining what a system is, in the abstract, intersects with the work of making a system in what I call the system artifact graph. This is the realization in one physical form or another of the abstract model of a system—how it is written down. Those artifacts are also what the work of building the system produces.

The remaining parts go into depth on concepts and tools that help with building complex systems. These include topics like project life cycles, system design, team organization, and planning. These parts use the language built up in the first parts. The later chapters are meant to be read as needed: when you find you need to know about a topic, dip into relevant chapters.

This work aims to help people understand how to do a better job of building complex systems. The strategy I use is to gather together in one place many things that people have already learned, but not necessarily understood as connected.

This strategy has good company. Many people over the years have worked to improve engineering and management practices. Many of those works have led to improved project performance and better systems, and every one of them I know about has had a downside. This work will be no exception.

I imagine the reader as I am writing this work, as if we are having a conversation. But in truth this is not a conversation; whoever reads this cannot ask clarifying questions, and I cannot respond with better explanations where the writing is unclear. This leaves me to wonder: will the reader read and understand what I meant to say?

Everything I am writing is based on my own experience, whether it comes directly from projects I have worked on or from what I have learned from others. This raises, as for any work, questions about biases in viewpoint and correctness. I have tried to question my viewpoints by checking them with others and comparing my conclusions to their experience, but that will always be an imperfect approach to the truth. So there are two other questions: is what I present here correct? And will it apply to new situations that I do not now imagine?

There is yet a third worry in writing a work like this. Will it come to be treated as the truth, unquestioned? Will someone treat it as dogma?

A work like this one, which provides practical guides for doing complex projects, can prod people who already have some experience into new thinking about how they do their work, adding new perspectives or providing overviews that help them think about what they are already doing. It adds to what they already know, but does not serve as the only guide for how they work.

In the longer term, though, every work like this that I know about has come to be taken as a One True Approach, where decisions are justified by saying “that’s how it is written”—without the people involved actually understanding why that approach says what it says, and not thinking through how the guidance applies to the actual work they have in front of them.

There are two examples that illustrate this behavior.

NASA has an extensive set of processes and procedures, with extensive documentation. The NASA Systems Engineering Handbook [NASA16] is an accessible way to start exploring that process. The processes have evolved over several decades of experience in what leads space flight missions to fail or succeed, and the procedural requirements are full of small details. People will do well to understand and generally follow those procedures for similar projects.

However, this has led too many people within NASA and the associated space industry to follow these requirements blindly. A NASA project must go through a sequence of reviews and obtain corresponding approvals to continue (and get funding). I have watched projects treat those reviews pro forma: they need a requirements review, so they arrange a requirements review, but nobody actually builds a useful (or even consistent) set of requirements to be reviewed. A preliminary design review has a checklist of criteria to be met, so presentation slides are prepared for each point, but the reasons behind those criteria aren’t really addressed. And a little while later the project is canceled because it isn’t making good engineering progress.

Similarly, the Unified Modeling Language (UML) [OMG17] brought together the experience of many software and system engineering practitioners to create a common notation for diagramming to describe complex systems. The notations provided a common language teams could use to express the structure and behavior of systems, so that everyone on a project could understand what a diagram meant. A common notation allowed organizations to build tools to generate and analyze these drawings. While not everyone follows the notation standard exactly, the standard has improved the ability of many engineers to document and understand systems. I certainly use many elements of UML diagramming regularly.

The UML has had a corresponding downside. Engineers who first learned how to think about systems using UML have had trouble thinking in other ways. In particular, some aspects of system specification and design are not fundamentally graphical, but some people who have grown up on UML find themselves uncomfortable working with tabular or record-structured information in databases (such as for requirements). I worked with one engineer who was using the SysML dialect of UML, which does not include all of the diagram types in the main UML language. He needed a kind of diagram that is in UML but not in SysML, and he was shocked at the suggestion that he should just use the UML diagram anyway, because it “wasn’t part of SysML”.

Generalizing from these, the problem is that when something provides a general, comprehensive guide about how to do complex work, this thing can come to be treated as the only answer. People who only learn from that one source can end up with a constricted understanding of how to do the work.

And so I hope that those who read this work will not take what is written here as the only word on the subject. A guide is not a substitute for learning and thought. I hope that readers will take what I have gathered here as inspiration to think about the work they will encounter in making complex systems, drawing on their own experience and the experience of others around them as well as what has been written. I hope that the points in this book help people keep in mind why something is being done, so that they can address the spirit of the need in addition to the rules of any particular procedure or methodology.

Finally, this last point has caused some reviewers to raise a concern about this kind of caution. By insisting that this book doesn’t have the complete answers to anything, and that people will have to think for themselves to do good systems work, it leaves the door open for anyone to justify to themselves any choice they care to make.

This is a valid concern. I have worked with lots of people who have made bad management and engineering decisions, and who were convinced they were right. In truth everyone makes poor decisions, and everyone has limited perspective. Every single project and every single person involved in building systems will face difficult decisions and will make some of them poorly.

In the end, I have decided that this is not something one can address with a book. I do not know who will read this work in future; I cannot know or address the specific problems they will have. I cannot have a conversation with each of you to try to sort through the actual problems and decisions you encounter. All I can do is provide you with one perspective, and hope that you will add it to your own in useful ways.

A set of case studies illustrating what can go right and wrong in a project to build a system.

This book is about both what a well-built system is and how to make that happen. To begin, I’ll start with a simple story: building a small cottage model out of Lego™ bricks.

This story is made up, but it reflects some of the situations I have found in real projects I have worked on. It deliberately illustrates problems in a simplified and perhaps exaggerated way to make them clear. The simplifications include: a very small team, and one that doesn’t need to grow during the project; customer “needs” that are simple; and a project that does not need to consider real emergent properties like safety, security, or even mechanical strength.

A customer wants a small cottage model, built out of Lego™ bricks. They would like the cottage to be white. They would like it to have a window. They have a base plate they would like it to fit on.

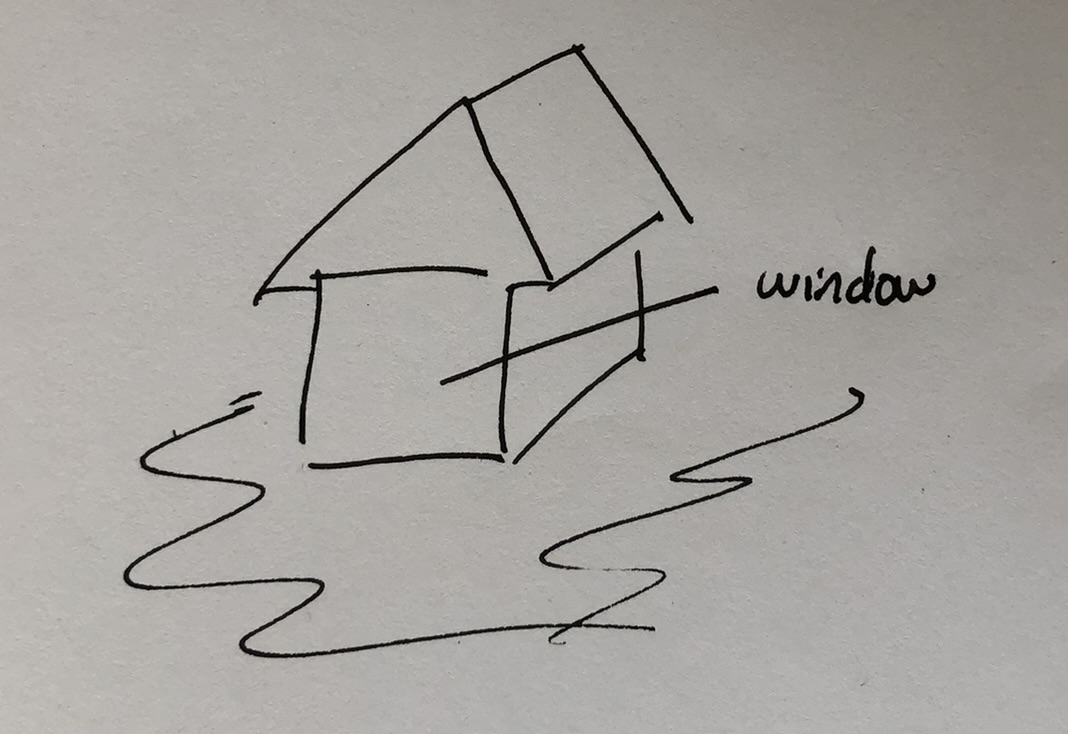

Someone on the team works with the customer to get this information and understand the needs. This results in a sketch, which the customer agrees reflects what they have asked for (Figure 3.1).

The project gets its team together, and they begin discussing how to design and build the cottage. Based on the sketch concept, they decide to split the work: one person for each of four walls, and one person for the roof.

The team discusses some basic design parameters. They decide on the length and height of each of the walls, based on the size of the base plate and the rough ratio of the sides in the sketch. They also decide which wall will get the window.

Each person on the team then begins designing and building their part, based on the sizes they have agreed on. The result is a set of five assemblies (Figure 3.2).

Right away there are some visible problems.

The team then tries to integrate the assemblies together to make the cottage. The result is not good (Figure 3.3).

There are integration problems.

At this point, the team addresses some of these issues. They add roof supports to the front and back walls, and redesign all the walls to interlock at the corners.

The result is a structure that integrates all the components (Figure 3.4).

There are still problems with the integrated cottage.

The problems with the side wall come from one of the team members rushing to rebuild that wall after they were reminded that the cottage was to be all white and not have red stripes.

The missing door is a specification problem that came to light when the customer saw the completed cottage. The original sketch developed with the customer didn’t include a door; it only had an annotation about a window. People implicitly know that cottages need doors, but builders may leave out the door if it isn’t explicitly specified.

After some systems work, the team corrects the problems, fixing the side wall and adding a door. Correcting the problems involved taking the cottage more than halfway apart and rebuilding it. The result meets what the customer wanted (Figure 3.5).

There were several problems that the team encountered building the cottage.

The team did not work with the customer to develop a thorough understanding of the customer’s needs. The team only had a minimal writeup of the needs, and that writeup left an important need implicit (the need for a door).

Next, the team did not develop a concept of the system (the cottage) and check that concept with the customer. For example, the team could have made a more realistic drawing of the cottage, and talked with the customer about how the cottage would be used. Checking a concept would probably have caught the missing implicit requirement for a door.

To their credit, the team decomposed the cottage into components (walls and roof), defined some dimensional requirements each would meet, and assigned someone to design each component. Unfortunately the team did not work out and document the interfaces between components. This meant that no one looked at how the walls would be joined (interlocking or not), and no one looked at how the roof would be supported on some walls.

One of the team members building a wall did not follow requirements about color—or perhaps the color requirement was missing or unclear.

Finally, the team members did not communicate with each other. Ideally, each one would have shared abstract designs for their component with the people building components connecting to that component. Sharing these designs would likely have caught that each team member had different understandings of how their components would be joined together.

The outcome was that the project took longer than it should have, because of rework that could have been avoided.

Of course, this story is simpler than building a real building would be. A real building has multiple internal components, such as electrical, plumbing, or HVAC systems, that would create many more interfaces among components. A real building has to be designed to be mechanically sound; this requires systematic analysis to ensure that the building will stay up even during unusual events like storms or earthquakes. A real building also has safety concerns, like fire safety. Finally, building a real building is regulated in most places, requiring permits, inspections, and approvals from external authorities to ensure regulatory compliance.

Some time passes, and the customer decides that they would like a larger model cottage, and they make a request to add on to the initial version. The team that built the original cottage has moved on to other projects.

A new team talks with the customer to learn what the customer wants. How much larger do they want the extended cottage to be? Should it be extended horizontally or vertically? The customer indicates that an extension adding between 50% and 100% of the original floor area would be sufficient, and the customer prefers a horizontal extension.

The team next has decisions to make about the overall design of the extension. They settle on an approach that matches the style of the original part and adds a little over half the floor area. They suggest to the customer that a window in the extension would be a good idea, and the customer agrees.

The new team does not have access to the team that made the original design decisions. They have to reverse engineer the design approach used by examining the cottage as built.

The original cottage was located toward the back of the base plate, and the team has decided that the extension should be at the back of the original. This implies that the team will have to move the original cottage forward. The team examines the original structure and determines that it can be moved on the base plate without problems.

The new team works together to design and build the extension. They have learned about the problems that the original team had, and so they manage the interfaces between walls and with the roof better. However, they don’t have access to the decisions that the original team made about interlocking the walls for strength, and so they build the extension as a separate unit.

This illustrates a common scenario: changes are made to a system long after it was originally built. The changes can be complex projects on their own. The original team may be long gone, or they may no longer remember details that were not written down. Knowing the original design decisions and their rationales affects how the changes are designed.

The changes do not just add features (new space); they also add interfaces between the new parts and the original, and can change the interfaces within the original.

The team that built the original cottage did not document the design decisions they made. The team building the addition had to reverse engineer the design from the built cottage. The lack of information about the rationale for how walls were connected led to a different, less structurally sound approach for connecting the addition to the original structure.

The project to build the addition took longer than it would have if the team had not had to reverse engineer the design. The lack of design rationale led to a structural solution that is sufficient for plastic bricks but would not work in a real structure.

In this story, the new team did learn from the original experience that they should do systems-level work. They worked through the interfaces between new parts, and this led the new project to go more smoothly than the original. The lesson is that learning over time matters.

Once again, this story is a simplification of a real building project. A real building would have far more interfaces: electrical circuits and plumbing might need to be extended. The structure of a real extension would have to be integrated into the original structure.

This story did not show the value of designing the original to be expanded. In the example, the original cottage could have been placed forward on the base plate so there was space for a later addition. In a real building, by analogy, designing the electrical main panel to have space for additional circuits and enough capacity to add more usage would make an addition easier.

As I present these stories, I will link them to the principles in Chapter 8 that can provide solutions.

Project leadership. Some of the problems in this story relate to how the cottage-building project was led. The most relevant principle is Section 8.1.3—Principle: Systems view of the system. The original team’s work would have gone more smoothly if they had had someone responsible for ensuring that the system made sense as a system.

System-building tasks. Some of the problems related to how the original team went about its work—which resulted in problems with the final system product.

The team. This story does not illustrate many problems with the team itself. However, the team building the original cottage built each of the components in isolation, and did not discover that their parts would not integrate until the parts had been built.

This chapter presents some case studies of how people have built complex systems.

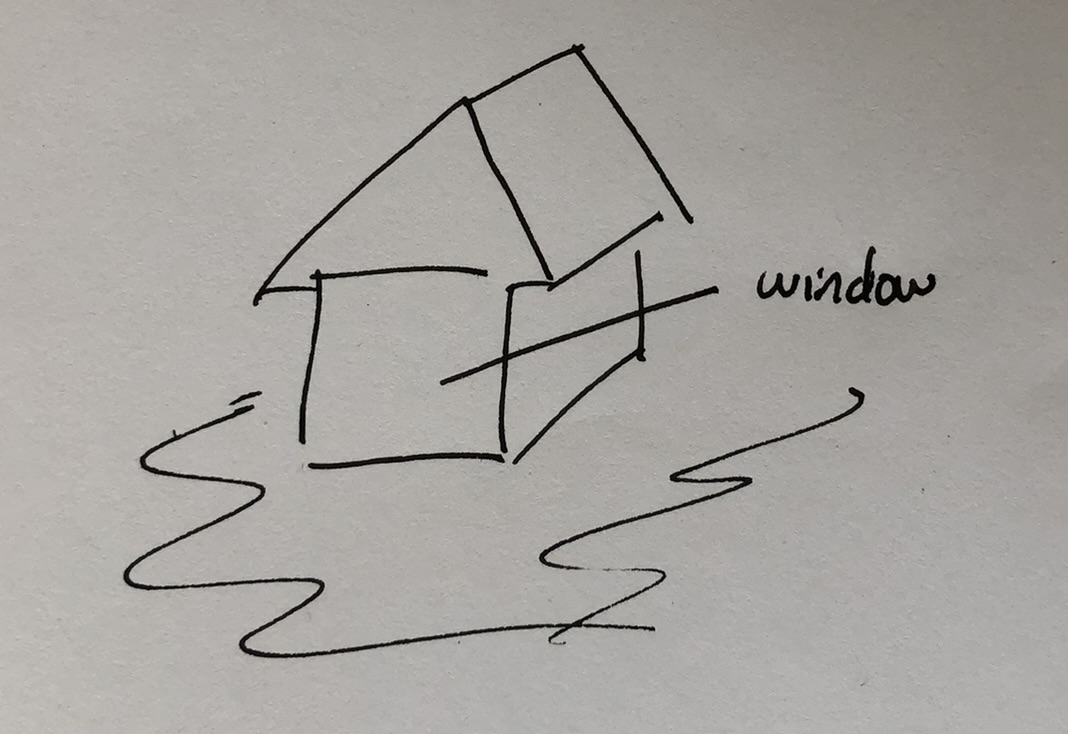

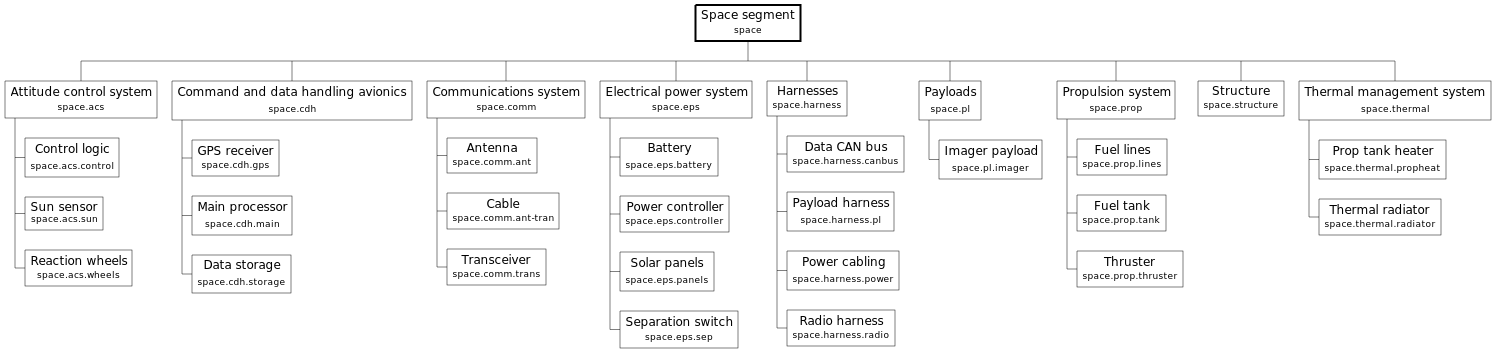

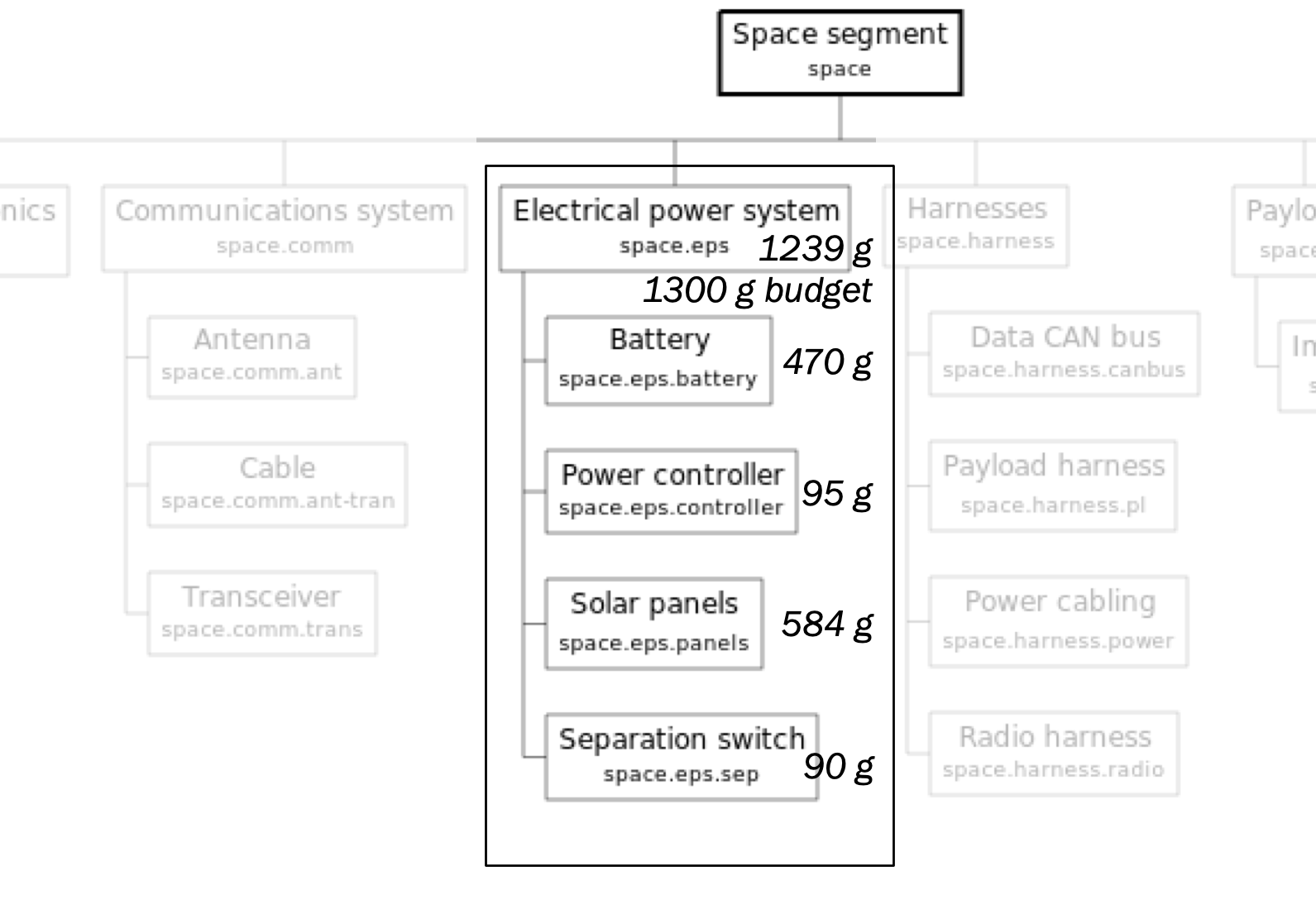

The project. I worked on a NASA small spacecraft project. The project’s objective was to fly a technology demonstration mission to show how a large number of small, simple spacecraft could perform science missions. The mission objectives were to demonstrate performing coordinated science operations on multiple spacecraft, and to demonstrate that the collection of spacecraft could be operated by communicating between one spacecraft and ground systems, and the spacecraft then cross-linking commands and data to perform the science operations.

The problem. The mission had one set of explicit, written mission objectives to perform the technology demonstration. It also had a number of implicit, unwritten constraints placed on it, primarily to re-use particular spacecraft hardware and software designs.

Those two sets of objectives resulted in conflicts that made the mission infeasible. There were three key technical problems: power consumption was far in excess of what the spacecraft’s solar panels could generate; the legacy radios could not communicate effectively over the distances involved; and the design had insufficient computing capability to accurately compute how to point spacecraft for cross-link communication.

Conflicts like these are not uncommon when first formulating a system-building project, and NASA processes are structured to catch and resolve them. The NASA Procedural Requirements (NPRs), a set of several volumes of required processes, require projects to formalize mission objectives and analyze whether a potential mission design is feasible. This work is checked at multiple formal reviews, most importantly the Preliminary Design Review (PDR).

At the PDR, expected project maturity is:

This project, however, failed at three of the necessary steps. First, the project did not perform top-down systems engineering, such as properly documenting mission objectives, developing a concept of operations, and refining those into system-level and subsystem-level specifications. In particular, the implicit constraints were never documented as requirements; they were tacitly understood by the team and rarely analyzed. The requirements that were gathered were developed by subsystem leads; they were inconsistent and did not derive from the mission objectives. Second, individual team members did analyses that showed problems with the radios, their antennas, and the ability to point the spacecraft so that cross-link communications would work. The people involved repeatedly tried to find a fix within their individual domains of expertise, and the problems were never raised to be addressed as a systemic problem. Finally, the PDR was the last check where these problems should have been brought to light, since refining the mission objectives and the concept of operations would have failed to show communication working. Instead, the team focused on making the review look good rather than addressing the purpose of the review.

Outcome. The project proceeded to build the hardware for multiple spacecraft, began developing the ground systems and developing the flight software. After several months, the project neared the end of its budget, and the spacecraft design was canceled. Something like two years’ worth of investment was lost, and the capability of performing a multi-spacecraft science mission was never demonstrated.

The agency later found some funds to develop a much simplified version of the flight software and relaxed the mission objectives substantially to only performing some minimal cross-link communications. A version of that mission was eventually flown.

Solutions. The project made four mistakes. Each one of them could have been corrected if the project had followed good practice and NASA required procedures.

First, the conflicting mission objectives and constraints should have been resolved early in the project. NASA has a formal sequence of tasks for defining a mission and its objectives, leading to a mission definition that is approved and signed by the mission’s funder. If the project had followed procedure, the implicit constraints would have been recorded as a part of this document. Documentation would have encouraged evaluation of the effects of those constraints.

Second, the project did not do normal systems engineering work. The systems engineering team should have documented the mission objectives, developed a concept of operations for the mission, and performed a top-down decomposition and refinement of the mission systems. In doing so, problems with conflicting objectives would have been apparent. The systems leadership would have been involved in analyses of the concept, and thus been aware of where there were problems.

Third, the team lacked effective communication channels that would have helped someone working on one individual problem raise the issues they were finding up to systems and project leadership, so that the problems could be addressed as systems issues. For example, one person found that the flight computer would not be able to perform good-enough orbit propagation of multiple spacecraft so that one spacecraft would know how to point its antenna to communicate with another. A different person found problems with the ability of the radios to communicate at the ranges (and relative speeds) involved.

Finally, the PDR should have been the safety net to find problems and lead to their resolution. The NASA procedural requirements have a long list of the products to be ready at the PDR. (See [NPR7123, Table G-6, p. 111] and [NPR7120, Appendix I].) The team took a checklist approach to these several products, putting together presentations for each topic in a way that highlighted progress in the individual topics but failing to address the underlying purpose: showing that there was a workable system design.

Had any of these mechanisms worked, the systems and project leadership would have detected that the conflicting mission objectives were infeasible and led the project to negotiate a solution.

Principles. This example is related to several principles for a well-functioning project.

The project. I worked at a startup company that was building a high-performance, scalable storage system. The ideas behind the system came from a university research project, which had developed a collection of technology components for secure, distributed storage systems.

The company had developed several proof-of-concept components and was transitioning into a phase where it was getting funding and establishing who its customers were. The company hired a small marketing team to work out what potential customers needed and to begin building awareness of the value that the new technology could bring.

The problem. The marketing team had experience with computer systems, but not with storage in particular. They could identify potential market segments, but they did not have the background needed to talk with potential customers about their specific needs.

The engineering team was similarly not trained in marketing. Some of the team members had, however, worked at companies that used large data storage systems, and so had experience being part of similar organizations.

Solutions. The marketing team set up a collaboration with some of the technical leads. This collaboration left each team in charge of their respective domains, with the technical leads helping the marketing team do their work and the marketing team providing guidance about customer needs to the engineering team.

One of the technical leads acted as a translator between the marketing and engineering teams, so that information flowed to each team in terms they understood. Technical leads joined the marketing team on customer visits, helping to translate between the customers’ technical staff and the marketing team. The marketing team conducted focus group meetings, and some of the technical leads joined in the back room to help frame follow-up questions to the focus groups and to help interpret the results.

Outcome. The collaboration helped both teams. The marketing team got the technical support and education they needed. The engineering team gained a proper understanding of what customers needed, so that the system was aimed at what customers actually required.

Principles. This example is related to the following principles:

The project. This occurred at the same startup, which was building a scalable storage system.

The problem. The team focused on making the system highly available, to the point where we had an extensive infrastructure for monitoring input power to servers and providing backup power to each server. If the server room lost mains power, our servers would continue on for several minutes so that any data could be saved and the system would be ready for a clean restart when power came back on. We did a good job meeting that objective.

What we forgot is that people sometimes want to turn a system off. Sometimes there is an emergency, like a fire in a server room, and people want the system powered off right away. Sometimes preventing the destruction of the equipment is more important than losing a few minutes’ worth of data. We had no power switches in the system and no way to quickly power it down.

Outcome. In practice this wasn’t too serious a problem because emergencies don’t happen often, but it meant that the system couldn’t pass certain safety certifications.

Solutions. We made two mistakes that led to the problem.

The first mistake was that everyone on the team saw high availability as a key differentiator for the product, and so everyone put effort into it. This created a blind spot in how we thought about necessary features.

The second mistake was that we did not work through all of the use cases for the system, and so we missed implicit features, including powering off. Building up a thorough list of use cases can serve as a way to catch blind spots like this, but the team did not build such a list.

Principles. This is related to one principle:

The project. I consulted on a project to build a technology demonstration of a constellation of LEO spacecraft for the US DoD. This constellation was to perform persistent, worldwide observations using a number of different sensors. It was expected to operate autonomously for extended periods, with users worldwide making requests for different kinds of operations. The constellation was expected to be extensible, with new kinds of software and spacecraft with new capabilities being added to the constellation over time.

One company organized the effort as the prime contractor. That company assembled a team of other companies of various sizes and capabilities as subcontractors. The team won a contract to develop the first parts of the system.

The problem. The constellation had to be able to autonomously schedule how its sensors would be used, and where major data processing activities would be done. For example, someone could send up a request for an image of a particular geographic region, to be taken as soon as possible. The constellation would then determine which available spacecraft would be passing over that region soon. Some of the applications required multiple spacecraft to cooperate: taking images from different angles at the same time, or persistently monitoring some region, handing off monitoring from one spacecraft to another over time, and performing real-time analysis on the images gathered on those spacecraft.

The prime contractor selected its team of other companies and wrote the contract proposal for the system before doing systems engineering work. This meant that neither a detailed concept for the system’s operation nor a high-level design had been done.

After the contract was awarded, the team had to rapidly produce a system design. This effort went poorly at first because the system’s concept had not been worked out, and different companies on the team had different understandings of how the system would be designed. The team had to deliver an initial concept of operations and requirements soon after the contract was awarded. The requirements were developed by asking someone associated with each expected subsystem to write some requirements. Needless to say, the concept, high-level design, and requirements were all internally inconsistent.

After the team brought me on to help sort out some of the design problems, we began to do a top-down system design and establish real specifications for the components of the system. We were able to begin working out general requirements for the autonomous scheduling components.

The project team had determined that they needed to use off-the-shelf software components as much as possible, because the project had a short deadline. One of the subcontractor companies was invited onto the team because they had been developing an autonomous spacecraft scheduling software product, and so the contract proposal was written to use that product.

However, as we began to work out the actual requirements for scheduling, it became apparent that the off-the-shelf scheduling product did not match the project’s requirements. The requirements indicated, for example, that the system needed to be able to schedule multiple spacecraft jointly; the product only handled scheduling each spacecraft independently. The system also had requirements for extensibility, adding new kinds of sensors, new kinds of observations, and new kinds of data processing over time. This suggested that strong modularity was needed to make extensibility safe, but the off-the-shelf product was not at all modular.

Outcome. The mismatch between the decision to use the off-the-shelf scheduling product and the system’s requirements led to both technical and contractual problems.

The technical problem was that the scheduling product could not be modified to meet the system requirements. The project did not have the budget, people, or time to do detailed design of a new scheduling package that would meet the need.

The contractual problem was that the subcontractor had joined the project specifically because they saw a market for their product and were expecting to use the mission to get flight heritage for it. When it became clear that their product did not do what the system needed, they discussed withdrawing from the project.

In the end, the customer decided not to continue the contract and the project was shut down.

Solutions. This project made three mistakes that, had they been avoided, could have changed the project’s outcome.

First, the team did not do the work of early stage systems engineering to work out a viable concept and high-level design before committing to partners and contracts. This would have made it clear what was needed of different system components. It would also have provided a sounder basis for the timelines and costs in their contract proposal.

Second, the team made design and implementation choices for some system components without understanding the purpose that those components needed to fulfill.

Finally, the team made commitments to using off-the-shelf designs without determining whether those designs would work for the system.

Principles. The solutions above are related to the following principles:

The project. I consulted for a company that was working to build an autonomous driving system that could be retrofitted into certain existing road vehicles.

The company had started with veterans from a few other autonomous driving companies. They began their work by prototyping key parts of a self-driving system, to prove that they had a viable approach to solving what they saw as the key problems. This resulted in a vehicle that could perform some basic driving operations, though it was always tested with a safety driver on board.

The team focused only on what they saw as the most important problems in an autonomous driving system. They believed that it was important to demonstrate a few basic self-driving functions as rapidly as possible—in part because they believed that this would help them get funding, and in part because they believed that this would help them forge partnerships with other companies. They focused on a simplified set of capabilities, including sensing, guidance, and actuation mechanisms for driving on a road.

The problem. This focus meant that the team developed a culture, along with a few partially documented processes, that was geared toward building a prototype-style product, even as they began to fit their system into multiple vehicles and test them on the road (with safety drivers). When they found a usage situation in their testing that their driving system did not handle as they felt it should, they added features to handle that situation to the sensing and guidance components and to the simulation tests they used on those components. In other words, the engineering work was largely reactive.

The team did not spend effort on analyzing whether the new features would interact correctly with existing features, relying on simulation testing to catch regressions. They did not develop a plan for features that they would need, and for how they would integrate other systems with the core functions they had already prototyped.

Some of the team members were aware that they needed to improve the safety of the driving system and the rigor with which the team designed and built the system. These team members, some of whom were individual engineers and some of whom were leaders, tried from time to time to define some basic individual processes, like defining requirements before design or conducting design reviews. Their goal was always to move the team incrementally toward sound engineering practice.

None of these attempts worked: each time, a few people would try a new procedure, task, or tool, but a critical mass of the team would keep working the way they had been in order to keep adding new features in response to immediate needs.

Outcome. After nearly two years, the team had not changed its practices and continued to work as if they were building a prototype. The team in general did not define or work to requirements; they did not analyze the systems implications of potential new features before implementing them. The team was making little progress on developing a safety case for the system.

Solutions. The fundamental problem was a misalignment between the incentives that drove the team in the short term and long-term practices needed to build a safe and reliable system.

The team as a whole, from the leadership down, developed habits focused on developing a proof of concept that would let the company get additional funding, as well as attract good staff and help the company build partnerships. This was the right choice for the company in its early days, because a company that cannot get funding does not get to move on to the long term. This short-term focus drove the habits and culture of the early company.

Later, as the company got funding and built up a team to develop the system, they needed to change their practices. Changing a team’s culture and habits is hard, especially when the team’s practices worked well at first. The team’s habit of focusing on short-term results, in particular, defined how they organized all their work.

To become a company building a product that is viable in the long term, teams like this must make a deliberate change to their culture, habits, and practices. A disruptive change like this does not happen spontaneously: a team’s culture defines the stable environment in which people can do what they understand to be good work. This creates a disincentive to make a change that disrupts how everyone works together.

Deliberate and pervasive changes come from the team’s leadership. The leadership must first recognize that a change is needed and work out a plan for what to change, how quickly, and in what way. The leadership must then explain the changes needed, create incentives that will overcome the disincentives to change, and hold people on the team accountable for making the changes.

Principles. This case reflects some more of the principles outlined in Chapter 8.

The project. A colleague was an engineer working on an electronics-related subsystem at a large New Space company that was building a new launch vehicle.

The team in question was responsible for designing one of the avionics-related subsystems and acquiring or building the components. This required finding suppliers for some components and ordering the necessary parts.

The problem. The company had processes in place for both vendor qualification and parts ordering. They included centralized software tools to organize the workflow.

The vendor qualification process began with submitting a request into the tools. The request was then reviewed by a supplier management team; once they approved a supplier, the avionics team could start placing purchase requests to buy parts. Each purchase request would similarly be routed to an acquisition team that would make the actual purchase from the supplier.

The process had two intents: first, to take the work of qualifying potential vendors and managing purchases off the engineering team, and second, to ensure that the vendors were actually qualified and that parts orders were done correctly.

From the point of view of the engineers building the avionics, the processes were opaque and slow. They would put in a request, and not know if they had done so properly. Responses took a long time to come back. At one point, my colleague reverse engineered the vendor qualification process in order to figure out how to use it; the result was a revelation to other engineers.

It also appeared that the positions responsible for processing these requests were understaffed for the workload. Most of the time, these people did not have the time to review vendors properly.

Outcome. Having supply chain processes was a good thing: when they worked, they increased the likelihood that the acquired parts would meet performance and reliability requirements, that the vendors would deliver on schedule and cost, and that the cost of acquiring parts remained within budget.

However, getting vendors qualified to supply components and then getting the components took a long time, delaying the system’s implementation and then delaying testing and integration.

The suppliers and the parts did not get the intended scrutiny, which may have let problem suppliers or parts through.

The company acquired a reputation among its employees for being slow-moving and a difficult place to work.

Solutions. There are four things that could have been done to make these processes work as intended.

First, the processes should be documented so that everyone involved knows how they work. In this situation, it seems that people playing different parts in the process knew something about their part, but they did not understand the whole process; if there was documentation, the people involved did not find it. The process documentation should inform all the people involved what all of the steps are, so they understand the work. It should make clear the intent of the process. It should also make clear what makes a request succeed or fail.

Second, the processes should be evaluated to ensure that every step adds value to the project, compared to not doing that step or doing the process another way.

Third, the supporting roles—in this case, those tasked with reviewing and approving requests—should be staffed at a level that allows them to meet demand.

Finally, the project should regularly check whether its processes are working well, and work out how to adjust when they are not working.

Principles. The following principles apply:

The project. The first transcontinental railroad to cross North America was built between 1862 and 1869 [Bain99]. It involved two companies building the first rail route across the Rocky Mountains and the Sierra Nevada, one starting in the west and the other in the east. It was built with US government assistance in the form of land grants and bonds; the government set technical and performance standards that had to be met in order to get tranches of the assistance. The technical requirements included worst-case allowable grades and curvature. The performance requirements included establishing regular freight and passenger service to certain locations by given dates.

The problem. The companies building the railroad had limited capital available to build the system. They had enough to get started, but continuing to build depended on receiving government assistance and selling stock. Government assistance came once a new section of continuous railroad was accepted and placed into service. In addition, the two companies were in competition to build as much of the line as possible, since the amount of later income depended on how much each built.

This situation meant that the companies had to begin building their line before they could survey (that is, design) the entire route. They operated at some risk that they would build along a route that would lead to someplace in the mountains where the route was uneconomical—perhaps because of slopes, or necessary tunneling, or expensive bridges.

Because the building began before the route was finalized, the companies could not estimate the time and resources needed for construction beyond some rough guesses. The companies worked out a general bound on cost per mile before the work started, and government compensation was based on that bound. In practice the estimate was extravagantly generous for some parts of the work.

Solutions. The initial design risk was limited because there were known wagon routes. People had been traveling across the Great Plains and the mountains in wagons for several years. While the final route did not exactly follow the wagon routes, the early explorations ensured that there was some feasible route possible.

The companies built their lines in four phases: scouting, surveying, grading, and track-laying. (In some cases they built the minimal acceptable line with the expectation that the tracks would be upgraded in the future once there was steady income.) Scouting defined the general route, looking for ways around bottlenecks like canyons, rivers, or places where bridges or tunnels would be needed. Surveying then defined the specific route, putting stakes in the ground. The surveyed route was checked to ensure it met quality metrics, such as grade and curvature limits. After that, grading crews leveled the ground, dug cuts through hills, and tunneled where necessary. Finally, track-laying crews built bridges and culverts where needed, then laid down ballast, ties, and rail. After these phases, a section of track was ready for initial use.

Scouting ran far ahead of the other phases, sometimes up to a year ahead. Survey crews kept weeks or months ahead of grading crews. The grading and track-laying crews proceeded as fast as they could. All this work was subject to the weather: in many areas, work could not proceed during winter snows.

Outcome. The transcontinental railroad was successfully built, which opened up the first direct rail links from one coast of North America to the other. The early risk reduction—through knowledge of wagon routes—accurately showed that the project was feasible.

The companies were able to open up new sections of the line quickly enough to keep the construction funded. The companies received bonds and land grants quickly enough, and revenue began to arrive.

The approach of scouting and surveying worked. The scouting crews investigated several possible routes and found an acceptable one. While there were instances of tentatively selecting one route then changing for another—sometimes for internal political reasons rather than technical or economic reasons—no section of the route was changed after grading started. In later decades other routes were built, generally using tunneling technology that was not available for the first line. Many parts of the original line are still in regular use.

Principles. The transcontinental railroad project was an example of planning a project at multiple horizons, where the work of implementing begins before the design is complete, and where the plan and design are continuously refined.

Foundational definitions used throughout the rest of this book, including:

This book is about the work involved in making a system—what a system is, and how to do a good job making one.

Part I presented a set of case studies that showed how system-building projects can go well—or not. This leads to two questions: How does one build a system well? And how does one avoid the problems?

To start finding answers to these questions, consider three aspects of making a system: what a system is; the activities involved in making it; and the people who do the activities that make the system.

A system. A system is “a regularly interacting or interdependent group of items forming a unified whole”.[1] Other definitions speak to a set of items or components that work together to fulfill a purpose.

This definition includes some of the key aspects of a system.

For artificially built systems, the system is the outcome of all the work that people do to make the system.

Having a purpose distinguishes a system built by people from systems in nature. A natural system often just exists, and any meaning or purpose is assigned to it after the fact by people. A human-built system, on the other hand, does something for someone. Its purpose can be described in terms of what it does for someone, and why it is worth the effort to make a system do that.

Most systems are not static: they evolve rapidly as they proceed from concept through design, and once in operation, they continue to evolve as their users’ needs evolve.

The next chapter, Chapter 6, discusses more about what a system is.

Making a system. The work of making a system can be seen as a string of activities, the life cycle of the system. It begins with an idea. That idea might be a user’s need, or it might be an idea for a new way to do something that might fill an as-yet-unidentified user’s need. The work proceeds to translate that idea into designs and then into a working system. This work goes through a number of steps, such as developing a concept, specifying its pieces, designing and implementing them, integrating the parts and verifying the assembly. Once a system has been built, it can be placed into operation. A system that has been in operation may change over time: users’ needs change, or technology changes. Eventually, every system is retired and disposed of.

All these activities are done by a team of people who are building the system, and the point of spending the effort is for the system, once built and in operation, to fill its purpose.

Chapter 7 discusses more about how to make a system.

Who does the work. A team of people working together does all the activities involved in making the system. For complex systems, the team can get large and may involve people at different companies and with different skills.

The team of people is itself a system: a set of people, whose purpose is to build the objective system, who interact with each other through discussion, documentation, and artifacts. A team that is functioning well is able to focus their efforts on the purpose of the system they are building. The team is organized so that its members have the information each needs to do their part, and can communicate so that the pieces of the system they create work together.

Key roles. A team that functions well, like any human-built system, does not happen by accident; it happens because someone makes the effort to design and implement it so that it works well.

In practice, there are three roles that do this work of organizing and running the team. These roles may be divided among team members in many different ways, but every team building a complex system needs the three roles filled somehow. The roles are:

The intersections. Having teased apart the ideas of system, system-building, and people, and the ideas of systems engineering, project management, and project leadership, the next step is to acknowledge that none of these things are in fact separate.

The objective of a project is to produce a system. The way to produce it is to do system-building work. The people in a team do that work. All three must fit together: the way that the work gets done determines whether the resulting system meets its purpose. How the team is organized, along with its culture and habits, governs how the people will do the work.

While systems engineering, project management, and project leadership are different roles and involve different skills, they work together. Leadership by itself gets nothing done; that comes from engineering and management. Leadership and management without systems engineering might produce a system but it probably won’t work. Leadership and engineering without management usually means a lot of engineers running around doing cool things but also wasting time and resources and not actually getting things done. Management and engineering without leadership isn’t able to make decisions or take responsibility.

The people filling each of these three roles also need to understand their counterparts’ roles. A systems engineer who designs something that would require more time or resources than the project has is not going to be effective. A project leader who does not understand the work the team does is not going to model good work practices. A project manager who does not understand the engineering is not going to build a plan or schedule that makes sense.

Systems work, in the end, is about doing work that makes a coherent whole out of the parts it has to work with. The work of making a system is just as much systems effort as its product is. Only when the parts fit together does the work get done as it should.

Working with systems is about working with the whole of a thing. It is a bit ironic that to make the whole accessible to rational design, we need to talk about the parts that make up systems work.

That is one of the first points about systems. Most systems are too complex for a human mind to remember and understand as a whole at one time. To work on these systems, people must find ways to abstract and to subset the problem. This book discusses some of the techniques for slicing a system into understandable parts, along with ways to use those techniques and why to use them. In the end, however, everything in here deals with carefully-chosen subsets of a system.

This chapter covers some of the essential concepts and building blocks that are the foundation for the techniques discussed in the rest of this book.

The subjects for systems work can be divided into five groups:

The first four subjects are connected by a reductive approach to explaining complex systems, in which the high-level purpose is explained by reducing it to simpler constituent parts and structure, and conversely expressing the purpose as emergent from these simpler parts. The final subject is about ensuring that the system does what it is supposed to do (and only that).

Every system that is designed and built has a purpose. That is, someone has an expectation of the benefits that will come from building the system, and they believe that those benefits will outweigh the costs (in resources, time, or opportunities) that will be incurred building the system.

Every system must be designed and built to address its purpose, and no other purposes, at the lowest cost practically achievable. This point may seem uncontroversial on its surface, but I have observed that the majority of projects fail to work to this standard, and incur unnecessary costs, schedule slips, or missed customer opportunities. Every design choice must be weighed according to how well each option helps satisfy the purpose or not; if an option does not, it should not be chosen.

Making design decisions guided by a system’s purpose means that the team must understand what that purpose is. The purpose must be recorded in a way that all the team members can learn about it. It also needs to be accurate: based on the best information available about what the system’s users need, and as complete as can be achieved at the time. The record of the purpose should avoid leaving important parts implicit, expecting that people will know that systems of a particular kind should (for example) meet certain safety or profitability objectives; people who specialize in one area will know some of these implicit needs but not others. The purpose documentation should also include secondary objectives, such as meeting regulatory requirements or leaving space in the design for anticipated market changes.

The understanding of a system’s purpose and costs will shift over time, both as the world changes and as people learn more accurately what the system’s value or cost will be. When the idea for the system is first conceived, the purpose may be accurate for that time but the understanding of the cost is likely to be rough. As design and development progress, the understanding of cost improves, but the needs may change or a customer may realize they misunderstood some part of the value proposition.

A system’s purpose also changes over longer periods of time. People add new features to an existing product to expand the market segment to which it applies or to help it compete against similar products. The technology available for implementing a system changes, creating opportunities for a faster, cheaper, or otherwise better system.

Systems leadership must balance the need for a clear and complete statement of a system’s purpose with the fact that the understanding of purpose will change over time. The agile [Agile] and spiral [Spiral] management methodologies arose from this need for balance between opposing needs. Chapter 22 addresses how systems engineering methodologies can help address this need.

Working in a way that is driven by system purpose requires discipline in the team and its leadership. Many junior- and mid-level engineers are excited about their specialist discipline, and want to get to designing and building as quickly as possible—after all, those are the activities they find fulfilling. I have observed team after team proceed to start building parts of a system that they are sure will be the right thing, without spending the effort to determine whether those parts are actually the right ones. Those design decisions may end up being correct many times, which leads to a false confidence in decisions taken this way (“I’m experienced; I’m almost always right!”). The flaw is that the wrong decisions can have a high cost, high enough to outweigh any benefit from the rapid, unstudied decision.

I have heard many teams say—rightly—that they need to make some design decision quickly, see whether it works, and then adjust the design based on what they learn. This line of reasoning is both a good idea and dangerous. If a team actually does the later steps of evaluating, learning from, and changing the design, then this approach can result in good system design. (This is discussed further in Chapter 44 on prototyping and Chapter 65 on uncertainty.) However, most teams lack the leadership discipline to follow this plan: once there is some design in place, pressures to keep moving forward drive teams to live with the bad initial design and accept complexity and errors. It requires discipline and commitment from the highest levels of an organization to take the time needed to learn from an early design and change what they are doing. The leadership must be prepared to push back against pressures to just live with a poor design and instead to require their team to take the time to learn and adjust, and to be clear with external parties, such as investors, that the plan is a necessary and positive way to realize a good product.

A system has a boundary that defines what is within the system and what is not. What the system does (its functions) and what it uses to do them (its components) are within the system.

The rest of the world is outside the system. The outside world includes the system’s environment: the part of the world with which the system interacts.

The boundary defines the interface between the system and its environment.

What is inside the system and where the boundary lies are within the control of the project building the system. The project must adapt its work to everything else outside the system boundary.

Systems are designed and built by people. The methods used to build them must account for two human issues. First, most systems today are too complex for one person to keep in mind all the parts at one time, leading to a need to work with subsets of the system at any given time. Second, most systems also require multiple people to design or build, either because of specialties or the total amount of work involved. This leads to the need to break the work up into parts for different people to work on.

Two techniques address these needs. First, systems are divided into component parts, typically in a hierarchical relationship: the system is divided into subsystems, which are in turn subdivided until they reach component parts simple enough not to require further subdivision. Second, people approach the system through narrow views, each of which covers one aspect of the system across multiple component parts, such as an electrical power view, an aerodynamics view, or a data communications view.

Dividing the system into component parts creates pieces that are small enough to reason about or work on by themselves. The description of a part must include its interfaces to other parts, so that its design or implementation can account for how it must behave in relation to them. At the same time, the interface definitions abstract away the details of the other parts, so that a person can concentrate their attention on just the one part.

Dividing up the system also allows different people to work on different parts, as long as each part honors the interfaces between them. The division into parts, and the definition of interfaces, establish each person’s area of responsibility and the scope of what they need to communicate with others. This is addressed further in the Teams section (Section 7.3.3).

The hierarchical breakdown of the system into components and subcomponents provides a way to identify all of the parts that make up the system, ensuring that all can be enumerated. It also defines a boundary to the system: the system is made up of the named parts, and no others.
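As a minimal sketch of how a hierarchical breakdown both enumerates every part and defines the system boundary, the code below models a hypothetical small uncrewed aircraft. The `Component` class, its methods, and the part names are illustrative assumptions, not a prescribed representation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Component:
    """A node in a hypothetical system breakdown: a part and its subparts."""
    name: str
    children: list[Component] = field(default_factory=list)

    def enumerate_parts(self, parent: str = "") -> list[str]:
        """List every part in this subtree, depth-first, as path-qualified names."""
        path = f"{parent}/{self.name}" if parent else self.name
        parts = [path]
        for child in self.children:
            parts.extend(child.enumerate_parts(path))
        return parts

    def within_boundary(self, path: str) -> bool:
        """A part belongs to the system only if the breakdown names it."""
        return path in self.enumerate_parts()


# Hypothetical breakdown of a small uncrewed aircraft.
uav = Component("uav", [
    Component("airframe", [Component("wing"), Component("fuselage")]),
    Component("avionics", [Component("flight_computer"), Component("radio")]),
    Component("power", [Component("battery")]),
])

parts = uav.enumerate_parts()
```

Here `parts` lists all nine named parts, and `uav.within_boundary("uav/payload")` is false: anything the breakdown does not name is, by construction, outside the system.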

Views provide a similar and complementary way of managing complexity: each view focuses on one aspect of the system across multiple parts and abstracts away the other aspects. This allows different people to address different aspects, as long as the aspects do not interact too much. For example, specialist knowledge of electrical system design can be brought to bear without the same person needing to understand the aerodynamics of the aircraft in which the electronics will operate.

Decomposing a system into component parts is one part of the system’s design; the other part is how those components relate to each other. The relations between parts define the structure of the system. These relations include all the ways that components can interact with each other, at different levels of abstraction. At low levels, this might be interatomic forces at the molecular level; at medium levels, mechanical, RF, force, or energy transfers; at higher levels, information exchange, redundancy, or control.

The structure needs to lead to the system’s desired aggregate properties, such as performance, safety, reliability, or specific system functions like moving along the desired path or providing reliable electrical service.

The aggregate properties are emergent, and arise from the way the structure combines the properties of individual components.[1] The structure must be designed so that the system has the desired emergent properties and avoids undesired ones. For example, in a simple redundant system, reliability emerges from combining two or more components that can perform the same function with the patterns of interaction among them: how each component receives the same inputs and generates consistent outputs, how the results are combined, and how each component responds to failure.
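The redundancy example can be made quantitative with the standard k-of-n reliability formula. This is a sketch under strong simplifying assumptions: each replica works with probability `r`, replicas fail independently, and the mechanism that combines their results has its own reliability `combiner`. The function name and the numbers are illustrative.

```python
from math import comb


def k_of_n_reliability(r: float, n: int, k: int, combiner: float = 1.0) -> float:
    """Reliability of a redundant group under simplifying assumptions.

    Assumes n replicas, each working with probability r, failing independently,
    and that the group works if at least k replicas work AND the mechanism that
    combines their results (with reliability `combiner`) works.
    """
    at_least_k = sum(comb(n, i) * r**i * (1 - r) ** (n - i) for i in range(k, n + 1))
    return combiner * at_least_k


single = k_of_n_reliability(0.9, n=1, k=1)              # one component alone
duplex = k_of_n_reliability(0.9, n=2, k=1)              # either of two suffices
tmr = k_of_n_reliability(0.9, n=3, k=2, combiner=0.95)  # 2-of-3 vote, imperfect voter
```

With these inputs the single component gives 0.9, the duplex pair about 0.99, and the voted triple about 0.923, so the voter’s own reliability caps what redundancy can deliver. The structure matters in other ways too: with `r = 0.4`, 2-of-3 voting yields 0.352, worse than one component alone. The independence assumption is the weakest point, since a shared power supply or a common software fault couples the replicas, which is exactly the kind of interaction the structure must account for.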

The structure must be designed to avoid unanticipated emergent properties, especially when those properties are undesirable. In a safe or secure system, for example, it is necessary to show that the system cannot be pushed into some state where it will perform an unsafe action or provide access to someone unauthorized. Avoiding unanticipated emergent properties is one of the hardest parts of correctly designing a complex system.

The structure must be well-designed for the system to meet its purpose, and for people to be able to understand, build, and modify it. In particular the structure needs to be:

There are good engineering practices that should be followed to achieve these aims, as I discuss in Chapters 43, 49, and 55.

Finally, the structure determines the interfaces that each component part must meet. Those interfaces in turn determine a component’s functions and capabilities, which guide the people working on the component, as discussed in the previous section.
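One way to see how structure determines interfaces is to record the relations between components explicitly and derive each component’s obligations from them. The sketch below assumes a hypothetical `Relation` record (provider, consumer, kind) and simple string descriptions of each interface; a real project would record far richer interface definitions. The same relation list also supports the narrow views discussed earlier, by selecting one kind of relation across all components.

```python
from collections import defaultdict
from typing import NamedTuple


class Relation(NamedTuple):
    """One hypothetical structural relation: `provider` supplies `kind` to `consumer`."""
    provider: str
    consumer: str
    kind: str


def derive_interfaces(relations: list[Relation]) -> dict[str, dict[str, list[str]]]:
    """Derive what each component must provide and may rely on from the structure."""
    result: dict[str, dict[str, list[str]]] = defaultdict(lambda: {"provides": [], "requires": []})
    for rel in relations:
        result[rel.provider]["provides"].append(f"{rel.kind} -> {rel.consumer}")
        result[rel.consumer]["requires"].append(f"{rel.kind} <- {rel.provider}")
    return dict(result)


def view(relations: list[Relation], kind: str) -> list[Relation]:
    """A narrow view: one aspect of the structure across all components."""
    return [rel for rel in relations if rel.kind == kind]


structure = [
    Relation("battery", "flight_computer", "electrical power"),
    Relation("battery", "radio", "electrical power"),
    Relation("flight_computer", "radio", "telemetry"),
]
interfaces = derive_interfaces(structure)
```

The radio, for example, ends up requiring electrical power from the battery and telemetry from the flight computer; changing the structure changes those obligations automatically.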

It is not enough to design and build the system; the team must also show that the system meets its purpose.

The team developing or maintaining the system must be able to show that the system complies with its purpose to customers, who need to know that the system will do what they expect; to investors, who need evidence that their investment is being used to create what they agreed to fund; and to regulators, especially for safety- or security-critical systems, who are charged with ensuring that systems function within the law.

The team also needs to ensure that pieces of the system meet the system’s purpose as they are developing or modifying those pieces. They must be able to judge alternative designs against how well they meet the purpose, and once built they must be able to check that the result conforms to purpose.

The process of showing that a system or a component part fulfills its purpose involves gathering evidence for and against that proposition and combining the evidence in an argument to reach an overall conclusion about compliance. Many kinds of evidence can be gathered: results of testing, results of analyses, results of expert review, or results from performing a demonstration of the system. The combination usually takes the form of an argument: a tree of logical propositions starting with the purpose and devolving hierarchically into many lower-level propositions that can be evaluated using evidence. The process must show that the structure of the argument is both correct and complete in order to justify the final conclusion.
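The tree-of-propositions structure can be sketched as a small data structure. This sketch assumes that every claim is decomposed conjunctively (a claim holds only if all of its subclaims hold) and that evidence is attached only at the leaves; the class names and the example claims are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Evidence:
    kind: str        # e.g. "test", "analysis", "review", "demonstration"
    supports: bool   # does this evidence support the claim it is attached to?


@dataclass
class Claim:
    text: str
    subclaims: list[Claim] = field(default_factory=list)
    evidence: list[Evidence] = field(default_factory=list)  # consulted only at leaves


def evaluate(claim: Claim) -> tuple[bool, list[str]]:
    """Return (holds, gaps) for the argument rooted at `claim`.

    A leaf holds only if it has evidence and none of it contradicts the claim.
    An interior claim holds only if every subclaim holds (an all-of decomposition).
    Gaps name every leaf that is unsupported or contradicted.
    """
    if not claim.subclaims:
        if not claim.evidence:
            return False, [f"no evidence: {claim.text}"]
        if not all(e.supports for e in claim.evidence):
            return False, [f"contrary evidence: {claim.text}"]
        return True, []
    holds, gaps = True, []
    for sub in claim.subclaims:
        sub_holds, sub_gaps = evaluate(sub)
        holds = holds and sub_holds
        gaps.extend(sub_gaps)
    return holds, gaps


top = Claim("Altitude sensing meets its purpose", [
    Claim("Reports altitude within tolerance", evidence=[Evidence("test", True)]),
    Claim("Tolerates loss of one sensor",
          evidence=[Evidence("analysis", True), Evidence("test", False)]),
    Claim("Never reports stale data as fresh"),
])
holds, gaps = evaluate(top)
```

Here the top claim does not hold, and `gaps` names the contradicted leaf and the one with no evidence at all. Note what the code cannot do: it checks that the leaves are supported, but whether the subclaims together actually imply their parent (the correctness and completeness of the argument’s structure) remains a human judgment.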

Pragmatically, arguments about meeting purpose usually follow a common pattern, as shown below. The primary argument that the implementation meets the purpose consists of a chain of verification steps: the implementation complies with a design, which complies with a specification, which complies with an abstract specification, which complies with the original purpose. As long as each step is correct, the end result should meet the original purpose. At each step, however, there is the possibility of misinterpretation or missing properties, or that the verification evidence is not as complete as believed. In practice this approach leaves plenty of uncaught errors in the final implementation. To catch some of the errors that slip through the chain of verification steps, common practice is to perform an independent validation, in which the final implementation is checked directly against the original purpose.
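A toy model shows why the chain can miss errors that independent validation catches. It assumes, purely for illustration, that each level of description can be summarized as a set of named properties, and that each verification step examines only some properties (`checked`). The property names and levels are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    name: str
    properties: frozenset[str]  # properties this level actually captures


def verify_chain(levels: list[Level], checked: list[set[str]]) -> list[tuple[str, set[str]]]:
    """Check each level only against the level directly above it.

    `levels` runs from purpose down to implementation; checked[i] is the set of
    properties the reviewers actually examined when verifying levels[i + 1]
    against levels[i]. A lost property is found only if that step examined it.
    """
    findings = []
    for i, (upper, lower) in enumerate(zip(levels, levels[1:])):
        missed = (upper.properties - lower.properties) & checked[i]
        if missed:
            findings.append((f"{lower.name} vs {upper.name}", set(missed)))
    return findings


def validate(purpose: Level, implementation: Level) -> set[str]:
    """Independent validation: compare the implementation directly with purpose."""
    return set(purpose.properties - implementation.properties)


full = frozenset({"reports_position", "warns_on_low_battery", "logs_faults"})
partial = frozenset({"reports_position", "warns_on_low_battery"})
levels = [
    Level("purpose", full),
    Level("abstract spec", full),
    Level("spec", partial),            # "logs_faults" is lost in translation here
    Level("design", partial),
    Level("implementation", partial),
]
# The spec review checked only what the spec mentions, not what it omits.
checked = [set(full), set(partial), set(partial), set(partial)]

chain_findings = verify_chain(levels, checked)
validation_findings = validate(levels[0], levels[-1])
```

Every step in the chain passes, because once a property is dropped, later steps compare against a level that no longer mentions it; only the direct comparison with purpose reports `logs_faults` as missing.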

Some industries, particularly those dealing with safety-critical automotive and aerospace systems, add another kind of evidence-based correctness argument. This is often called the safety case or security case. It consists of an explicit set of propositions, starting with the top-level proposition “the system is adequately safe” (or secure), and shows why that conclusion is justified using a large hierarchy of propositions. The lowest-level propositions in the hierarchy consist of concrete evidence; intermediate propositions combine them to show that more abstract safety or security properties hold. (See, for example, one group’s guidance on writing assurance cases [ACWG21].)

Finally, evidence takes many forms, depending on what needs to be shown. Some correctness propositions can be supported by testing. These typically show positive properties: the system does X when Y condition holds. Some of these conditions are hard to test, and are better shown by analysis or human review of design or implementation. Negative conditions are harder to show: the system never does action X or never enters state Y, or does so at some very low rate. These require analytic evidence, and cannot in general be shown by testing.
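A small example of analytic evidence for a negative condition is exhaustive state exploration, the core idea behind explicit-state model checking. The sketch assumes a hypothetical machine with a guard and a motor, modeled as a finite set of states; it searches every reachable state to show that “motor running with guard open” never occurs, something no finite set of tests could establish. The model, action names, and `interlock` flag are illustrative assumptions.

```python
from collections import deque
from typing import Optional

State = tuple[bool, bool]  # (guard_open, motor_on) in a hypothetical machine model


def successors(state: State, interlock: bool) -> list[tuple[str, State]]:
    """All actions possible from `state`, with the state each one leads to."""
    guard_open, motor_on = state
    moves = []
    # With the interlock, the guard cannot be opened while the motor runs.
    if not guard_open and not (interlock and motor_on):
        moves.append(("open_guard", (True, motor_on)))
    if guard_open:
        moves.append(("close_guard", (False, motor_on)))
    # The motor can only be started with the guard closed.
    if not motor_on and not guard_open:
        moves.append(("start_motor", (False, True)))
    if motor_on:
        moves.append(("stop_motor", (guard_open, False)))
    return moves


def find_unsafe(initial: State, interlock: bool) -> Optional[list[str]]:
    """Breadth-first search of every reachable state.

    Returns the shortest action sequence reaching an unsafe state
    (guard open while motor on), or None if no such state is reachable.
    """
    paths = {initial: []}
    frontier = deque([initial])
    while frontier:
        state = frontier.popleft()
        if state[0] and state[1]:
            return paths[state]
        for action, nxt in successors(state, interlock):
            if nxt not in paths:
                paths[nxt] = paths[state] + [action]
                frontier.append(nxt)
    return None
```

With the interlock, `find_unsafe((False, False), interlock=True)` returns `None` after exploring every reachable state; without it, the search returns the counterexample `["start_motor", "open_guard"]`. The result is a property of the model, so it is only as good as the separate evidence that the model matches the real implementation.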

I discuss matters of correctness, verification, validation, and the related arguments in Chapter 14.

The model in this chapter provides a way to think and talk about systems work. As a team begins a systems-building project, it will be gathering information or making decisions that can be organized using this model. The model can help guide people as they work through some part of the system. For example, the system’s purpose is reflected in the emergent behavior of the system, which in turn depends on the structure of how components interact. When the system is believed to be complete, the team should be able to verify that all of the relations indicated by this model are defined and correct. Later, as the system needs to evolve and the team makes changes to the system, this model helps them reason about what is affected by some change.

This model of systems provides a foundation for organizing the work that needs to be done to build the system. The next chapter presents a model for this work of building a system or component. The information about one component is represented in a set of artifacts, and there are tasks that make those artifacts. The structure of the artifacts, and thus of the tasks, is based on the model of systems and components in this chapter.

Part III goes into greater detail about each part of this model.

The previous chapter defined what a system is. In this chapter, I turn attention to how to make that system. “Making” includes the initial design and building of the system, as well as modifications after the initial version has been implemented.

Making the system is a human activity. Building a system correctly, so that it meets its purpose, requires a team of people to work together. Building a system of more than modest complexity will involve multiple people, usually including specialists who can work on one topic in depth and people who can manage the effort. It involves people with complementary skills, experiences, and perspectives. Such systems take time to build, and people will come and go on the team. A system with a long life, one that is upgraded or evolves, will be modified by people who have no access to those who started the work.

This chapter provides a model to organize and name the things involved in the making of a system—the activities, the actors, and what they work with. Later chapters provide details on each part of this model. This model includes both elements that are technical, such as the steps to design some component, and elements that are about managing the effort, such as organizing the team doing the work or planning the work. Note that this model does not attempt to cover all of managing a system project—there is much more to project management than what I cover here.

The model presented in this chapter only serves to name and organize. I do not recommend specific approaches for each element of the model here, only the attributes that good approaches should have. Later parts of this book address ways to achieve many of these things. For example, the team that is designing a system should have an organization (a desirable attribute), but I do not address here which organizational structures one can choose from.

The assembly of all the parts involved in making a system is itself a system. In those terms, this chapter presents the purpose (Chapter 9) of the system-making system and a concept for how to organize its high-level components (Chapter 11).

This model of making captures the activities and elements involved in executing the project to make or update a system.

The approach used for making the system should:

The making model has five main elements:

The artifacts are the things that are created or maintained by the work to make the system.

The artifacts have three purposes. First, the artifacts include the system’s implementation: the things that will be released or manufactured and put in users’ hands. The artifacts should maintain the implementation accurately, and allow people to identify a consistent version of all the pieces for testing or release. Second, the artifacts are a communication channel among people in the team, both those in the team in the present and those who will work on the system later. These people need to understand both what the system is, in terms of its design and implementation, and why it is that way, in terms of purpose, concept, and rationales. Finally, the artifacts are a record that may be required for future customer acceptance, incident analysis, system certification, or legal proceedings. Those evaluating the system this way will need to understand the system’s design, the rationales for that design, and the results of verification.
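Identifying “a consistent version of all the pieces” usually means recording a baseline: an exact fingerprint of every artifact in a release. Version-control and configuration-management tools do this in practice; the sketch below only illustrates the idea, assuming artifacts held in memory as bytes keyed by hypothetical paths and using SHA-256 digests from Python’s standard `hashlib`.

```python
import hashlib


def make_baseline(artifacts: dict[str, bytes]) -> dict[str, str]:
    """Record a SHA-256 digest for every artifact in a hypothetical release baseline."""
    return {path: hashlib.sha256(content).hexdigest() for path, content in sorted(artifacts.items())}


def compare(baseline: dict[str, str], artifacts: dict[str, bytes]) -> dict[str, list[str]]:
    """Report how a working set of artifacts differs from a recorded baseline."""
    current = make_baseline(artifacts)
    return {
        "changed": sorted(p for p in baseline.keys() & current.keys() if baseline[p] != current[p]),
        "missing": sorted(baseline.keys() - current.keys()),
        "extra": sorted(current.keys() - baseline.keys()),
    }


release = {
    "design/power.md": b"power design rev B",
    "src/controller.c": b"int main(void) { return 0; }",
    "tests/results.csv": b"case,result\nstartup,pass\n",
}
baseline = make_baseline(release)

working = dict(release)
working["src/controller.c"] = b"int main(void) { return 1; }"
del working["tests/results.csv"]
working["src/debug.c"] = b"/* scratch */"

report = compare(baseline, working)
```

The report flags the edited controller source, the missing test results, and the stray debug file, so anyone testing, certifying, or investigating the release can tell whether they are looking at exactly the baselined set of artifacts.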

The artifacts should be construed broadly. They include:

Artifacts other than the implementation are valuable for helping a team communicate. Accurate written documentation of how parts of the system are expected to work together (their interfaces and the functions they expect of each other) is necessary for a team to divide work accurately.