Making systems

Volume 2: Life cycles

Richard Golding

Copyright ©2026 by Richard Golding

Release: 0.4-review

Part V: Development methodology and life cycles

This part explains what life cycles and development methodologies

are. It covers what goes into a life cycle pattern and how the

patterns relate to other parts of how a project operates. It defines

development methodologies and how these relate to life cycles. The

last chapter lists several example life cycle patterns, which lead

into a comprehensive reference life cycle in Part VI.

Chapter 21: Introduction

11 October 2025

21.1 Purpose

Project operations is about the tasks that people do in the project.

The next chapters focus on project operations, expanding on the

material in Chapter 20. I discussed there how project

operations can be broken down into parts to make it more tractable:

development methodology, life cycle, procedures, planning, and

tasking.

- Development methodology (Chapter 22): the general

approach the project takes to organizing its work, such as whether

to work in iterations or linearly, how large tasks should be, and

whether to work on tasks independently or synchronously.

- Life cycle patterns (Chapters 23 and 24): the sequences of steps and tasks used to

complete various phases of the project, and how those steps and

tasks depend on each other.

- Plan (Chapter 64) and tasking (Chapter 68):

tracking the overall path to completing the project, and making

decisions about who should work on which tasks at what times.

The reasons to organize work include making efficient use of people

and resources, with little wasted work or idle time, and

ensuring good quality in the final system. Section 7.3.5 and Section 20.1 provide

further details.

The model of work I am using focuses on creating and organizing system

artifacts (Chapter 15). The artifacts are mostly

organized around components, with each component described by a

defined set of artifacts. (A few other artifacts are used in project

operations or project support.) The work is performed as tasks that

take in some artifacts and do work to generate other artifacts.

Operations is about working out the right tasks to be done, then when

to do each one, and keeping going as the tasks change.

The rest of this chapter begins with defining tasks: how most of them

involve building the artifacts that define each component, and how one

artifact builds on information in other artifacts. The project’s

development methodology influences how the work of building the

artifacts is broken into tasks. Building a component includes tasks

for checking work and decision points along the way. The tasks will

include decision points that cut across many threads of development.

The discussion next moves to how the known set of tasks changes as a

project moves along. As the team defines new components, they

implicitly define new tasks to design and implement those

components. As the work progresses, there will be problems to fix or

changes requested, each of which involves more tasks.

Finally, the chapter wraps up with a discussion of how to choose what

tasks to work on when. These decisions can be framed in terms of

uncertainty, and how different choices lead to increasing or

decreasing the uncertainty in the system’s artifacts.

21.2 Tasks

A task is a unit of work that one or more people will perform. The

common understanding of “task” is generally sufficient. The work

creates and updates the artifacts that make up the system.

In Section 20.4, I introduced the general idea of a

life cycle pattern: a set of phases or steps, with dependencies from

one phase to another. Each phase or step is made up of tasks,

which have dependencies among themselves. Phases can have

milestones; milestones are themselves tasks. Tasks are assigned to and

performed by one or more people.

The work done in a task uses some artifacts as inputs and produces or

updates some artifacts as output. The dependencies between tasks come

through artifacts that are outputs and inputs to tasks.
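
The relationship between tasks and artifacts can be sketched in a few lines of Python. This is a minimal illustration, not a tool the book prescribes: the `Task` class, the `task_dependencies` function, and the task and artifact names are all hypothetical. It shows how dependencies between tasks can be derived from artifacts alone, since one task depends on another when it consumes an artifact the other produces.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Task:
    """A unit of work that reads some artifacts and writes others."""
    name: str
    inputs: frozenset = field(default_factory=frozenset)
    outputs: frozenset = field(default_factory=frozenset)


def task_dependencies(tasks):
    """Return {task name: set of task names it depends on}.

    Task B depends on task A when A produces an artifact that B consumes.
    """
    producers = {}
    for task in tasks:
        for artifact in task.outputs:
            producers.setdefault(artifact, set()).add(task.name)

    deps = {}
    for task in tasks:
        needed = set()
        for artifact in task.inputs:
            needed |= producers.get(artifact, set())
        # A task that updates one of its own inputs is not its own prerequisite.
        needed.discard(task.name)
        deps[task.name] = needed
    return deps


# Hypothetical tasks for one component; the names are illustrative only.
tasks = [
    Task("write specification", frozenset({"concept"}), frozenset({"specification"})),
    Task("design", frozenset({"specification"}), frozenset({"design"})),
    Task("implement", frozenset({"design"}), frozenset({"implementation"})),
    Task("design verification", frozenset({"specification"}),
         frozenset({"verification design"})),
]

deps = task_dependencies(tasks)
```

In this sketch both "design" and "design verification" depend only on "write specification", so they could proceed in parallel once the specification exists.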

21.3 Defining tasks

The easiest way to understand how tasks are defined is to suspend

disbelief for a bit and pretend that one knows all of the components

in the system. This is, of course, unrealistic: the only time when one

actually knows everything is at the end when the system is

complete. One can begin by looking at all the components and mapping

out the work to be done with full knowledge, and then see how to work

in a more realistic situation where much of the system is not yet

known.

The basic approach to defining tasks starts by working out the system

artifact graph, based on the components in the system, and then

working out the tasks needed to build all those artifacts well.

21.3.1 Component breakdown structure

Defining tasks starts with the component breakdown (Section 11.3). At the end of the project, it names all the

components in the system and shows how one component is a part of

another. The system as a whole is the top level of the breakdown

structure. The breakdown structure will not be complete until late in

the project; components will be added and removed as the work

proceeds.

This example is of a simple small spacecraft. A real mission system

would include many system components in parallel, including ground

systems and a launch vehicle.

21.3.2 Artifact patterns

For each kind of component, there is a map of the artifacts involved

and the dependencies between them—how one artifact builds on

previous ones.

Dependencies include tracing: a fact recorded in one artifact is the

reason that something exists in a following artifact. One of the

principles of a good set of system artifacts is that every part of

every artifact can be traced back to a need in the system purpose, and

that every part of the system purpose is realized in implementation

artifacts and verified in other artifacts.

Bear in mind that these are artifacts, not tasks. Tasks make and

update artifacts. One could do a simple mapping from artifacts to

tasks, having one task to make each artifact in turn. In practice that

doesn’t work, as I will discuss in a later section.

The artifacts involved depend on the kind of component, though they

have elements in common. All components start with a purpose and end

with verification. Each specific project may use a different variant

of these artifacts, depending on its needs. Here are four

examples.

A simple regular component.

Most components that are developed in house follow this base pattern

of artifacts. It starts with a purpose, proceeds through concept and

specification, then to design and implementation on one track and

implementing and performing verification on another track.

A component supplied by a vendor.

When a vendor designs and implements a component, there are additional

artifacts related to selecting the vendor and the contract with

them. The vendor may be responsible for the component’s design and

implementation, but the project will still maintain a design and

implementation that are received from the vendor.

System as a whole.

The major artifacts for the whole system are similar to those for a

component, with two main differences: records of how the project

worked out the system’s purpose, and validation of the system against

the stakeholder needs.

System built under contract.

A system that is built under a competitive contract to someone else

has additional artifacts (and the order of work is somewhat

different). The project develops a proposal based on a request for

proposals; the proposal typically includes at least the system concept

and some parts of specification or design. If the team is selected,

there is often negotiation that results in a contract. This is

discussed more in Section 25.1.

21.3.3 Mapping artifact patterns onto breakdown structure

This leads to a map of all the known artifacts, the product of the

breakdown structure and the component artifact maps. (In the diagrams

that follow, I will use initials rather than the full names for each

artifact in order to manage visual complexity.)

One can make this artifact graph by starting with the component

breakdown structure, which has a tree structure, and replacing each

component in the tree with its corresponding graph of artifacts. When

a component has subcomponents (including the system as a whole), its

implementation artifact is expanded with the artifact graphs of each

of its subcomponents.

Applying the system and regular component artifact graphs in the

previous section to the small spacecraft component breakdown presented

earlier yields the following system artifact graph. Note how the

patterns recurse, with subcomponent artifacts nesting within component

artifacts.
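
The expansion from breakdown structure to artifact graph can be sketched as a short recursive function. This is an illustration under stated assumptions: the pattern, the breakdown, and the two cross-level links (a subcomponent's purpose follows from its parent's design, and a parent's implementation builds on its subcomponents' implementations) are hypothetical simplifications. For brevity, one pattern is used for every component, including the system as a whole.

```python
# Hypothetical artifact pattern for a regular component: each artifact maps
# to the artifacts it builds on. Names and edges are illustrative assumptions.
COMPONENT_PATTERN = {
    "purpose": [],
    "concept": ["purpose"],
    "specification": ["concept"],
    "design": ["specification"],
    "implementation": ["design"],
    "verification design": ["specification"],
    "verification": ["implementation", "verification design"],
}

# Hypothetical breakdown structure: component -> list of subcomponents.
BREAKDOWN = {
    "spacecraft": ["EPS", "avionics"],
    "EPS": ["solar panels", "batteries"],
    "avionics": [],
    "solar panels": [],
    "batteries": [],
}


def artifact_graph(breakdown, pattern, root):
    """Return {(component, artifact): set of (component, artifact) it builds on}."""
    graph = {}

    def expand(component):
        # Replace the component with its own copy of the artifact pattern.
        for artifact, builds_on in pattern.items():
            graph[(component, artifact)] = {(component, a) for a in builds_on}
        for child in breakdown.get(component, []):
            expand(child)
            # Assumed links between levels: the child's purpose follows from
            # the parent's design, and the parent's implementation is
            # assembled from the children's implementations.
            graph[(child, "purpose")].add((component, "design"))
            graph[(component, "implementation")].add((child, "implementation"))

    expand(root)
    return graph


graph = artifact_graph(BREAKDOWN, COMPONENT_PATTERN, "spacecraft")
print(len(graph), "artifacts for", len(BREAKDOWN), "components")
```

Even this toy breakdown of five components produces 35 artifacts, which hints at why the full graph quickly becomes too large to view in one piece.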

The number of artifacts grows rapidly, to the point that even for the

components listed for the simple spacecraft—most of which are still

components with further unlisted subcomponents—the graph is too

large to print and view in one piece. Using tools that organize the

artifacts and using common patterns helps people navigate all the

information without seeing it all in one place.

21.3.4 Development methodology and life cycle patterns

The next step is to define the tasks that will make and update the

artifacts. I use development methodology (Section 20.3) and life cycle patterns (Section 20.4) to define patterns of the tasks that make

artifacts. The development methodology defines the general style of

how the project will work: how it chooses to iterate, and how it

prioritizes one kind of work over another. The life cycle patterns

complement the development methodology with clearly defined patterns

that guide the team through steps of the work.

As I noted earlier, a simple pattern of building each

artifact in turn does not work well: good practice includes reviewing

or checking work at regular intervals, the project will likely have

milestones where decisions must be made, and building in order

(“waterfall methodology”) has known problems.

Basic life cycle patterns.

One life cycle pattern defines, for example, the sequence of tasks

involved in building a component. The basic flow might mirror the

dependencies of that component’s artifacts. The pattern adds reviews

and decision points to the tasks.

The flow in this pattern shows the tasks that are to be performed as

the component’s artifacts are created or updated. When someone

performs one task, changing some artifacts, this triggers the tasks

that follow in the pattern. For example, if someone changes the

design, tasks for the design review, implementation, implementation

review, verification, and acceptance review follow. When the design is

changed, however, the verification design and implementation don’t

need to be changed, so no tasks are performed for them.
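
The triggering behavior described above can be sketched as a walk over the task flow. The step names follow the example in the text, but the exact edges in `FLOW` are an assumption made for illustration, as is the function name `triggered_tasks`.

```python
from collections import deque

# Hypothetical task flow for one component: task -> tasks that follow it.
FLOW = {
    "specification": ["specification review"],
    "specification review": ["design", "verification design"],
    "design": ["design review"],
    "design review": ["implementation"],
    "implementation": ["implementation review"],
    "implementation review": ["verification"],
    "verification design": ["verification implementation"],
    "verification implementation": ["verification"],
    "verification": ["acceptance review"],
    "acceptance review": [],
}


def triggered_tasks(flow, changed):
    """Return the tasks downstream of `changed`, in breadth-first order."""
    seen = []
    queue = deque(flow[changed])
    while queue:
        task = queue.popleft()
        if task not in seen:
            seen.append(task)
            queue.extend(flow[task])
    return seen


after_design_change = triggered_tasks(FLOW, "design")
print(after_design_change)
```

With this flow, a design change triggers the design review, implementation, implementation review, verification, and acceptance review, while the verification design and verification implementation tasks are left alone because they are not downstream of the design.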

The pattern as drawn does not address versioning and

baselining. Presumably each task creates a version of its output

artifacts, and those versions are baselined after passing the next

review checkpoint. Note that this is similar to the workflow practices

implemented in several software version control systems, where changes

are made on a branch specific to a set of changes and promoted to a

shared branch or the main line through a controlled “push” mechanism

that requires review and approval.

Cross-cutting life cycle patterns.

Other life cycle patterns add milestones or decision points that cut

across multiple components. For example, the project might have a

milestone for demonstrating a plausible technical approach in order to

get funding to proceed to in-depth design. The project might impose a

review and decision milestone to get approval before starting an

expensive and irreversible implementation step, such as starting to

build an aircraft’s airframe.

The flow below shows how an overall system design review (of all

components to the third level) is added to the overall flow of

tasks. A review like this would be used relatively early in a project

to check that there is a plausible approach for building the system;

this is similar to a NASA Preliminary Design Review (Section 31.2.4).

Development methodology.

While the life cycle patterns define in general what tasks need to be

done and how they flow into each other, the patterns do not address

the bigger picture of how to organize work. Should each task be done

one after another? Should multiple tasks be done in parallel? Should

some tasks be done at the same time, with people working together?

The project’s development methodology answers these kinds of

questions. It defines the basic working style for the project.

As discussed in the next chapter (Chapter 22),

development methodologies reflect choices about whether the team works

in feature-by-feature iterations or in one linear flow through the

system; how big iterations are (if used); how far forward planning

looks; and how people working on related parts work together.

One possible methodology—as mentioned earlier—is to proceed

linearly through building a component, performing each task in the

life cycle pattern one after another. This is often called the basic

waterfall development methodology. The illustration above for a basic

component life cycle pattern shows how this works. When the

specification is complete, design tasks can begin. When a design step

is complete, implementation can begin. If the results

from one task do not pass their review, the work repeats parts of the

previous task to address the problems that were found.

This linear methodology is simple, but as many people know well, it

works poorly in practice—and I have never seen a project actually

operate this way. The problem is that each step commits the work to a

particular path, even if the choices made lead to problems later. This

is why the North American transcontinental railroads were built using

multiple steps (Section 4.7): one group would range ahead

finding a rough route through mountains; a second group would follow a

long way behind to survey a specific route; and later groups would

actually build the railway. If the railroad had been built in a more

linear fashion, with construction following not far behind route

planning and surveying, early decisions would have forced it into

crossing high mountains or building long tunnels under them, which was

beyond the capabilities of the time. The alternative would be to

abandon a lot of constructed track way and begin building on a

different route.

Alternative development methodologies provide greater flexibility at

the cost of somewhat less simplicity. They break up the work of

building a component into multiple iterations. The iterations can be

seen as slicing up the life cycle pattern into multiple

repetitions. Each iteration might step through the life cycle pattern,

focused on adding some set of features to the component. The

iterations might not all be identical; concept might be done first,

and iterations only cover specs through verification. Each iteration

has one or more goals for what should be implemented and verified at

the end, with each iteration building on the previous one until the component

is complete.

An iterative methodology might also include an initial “iteration” for

identifying hard problems, and prototyping or doing trades, before

proceeding to finish specification and starting development. This is

similar to a railroad project looking for mountain passes and feasible

river crossings far in advance of committing to a particular route.

A methodology like this addresses some of the problems with the linear

approach. The second iteration in the example involves working on the

concept, specification, and designs together. This can mean, for

example, refining a first version of the concept by sketching out

specification and designs. While sketching them out, the team learns

about the component and investigates design ideas to see what choices

there are to make, what technologies or subcomponents are available,

and begins looking at safety or security issues. By first sketching

these without trying to make a proper specification or design

artifact, the team can learn about issues that might otherwise cause

problems later. At the end of the tasks in the second iteration, the

team has a concept that is likely to be feasible, and they have notes

or sketches for specification and design. In the third iteration,

they can evolve the specification and design sketches into a first

draft of specification and design artifacts.

Some methodologies also organize work on related components so they

are done concurrently—at least during some iterations. This might be

done to develop and verify integration before building out detailed

implementations, in order to minimize the risk of finding integration

errors late in development when they are more expensive to

correct. For example, two components that communicate over a wired

connection would be designed at the same time, possibly by one person

or possibly by a couple of people working closely together. In these

methodologies, one often uses mockups and stubs in place of

functionality that will be designed and built in future iterations.

Spiral development methodologies, on the other hand, break system

development up into large iterations. During one large iteration (one

turn of the spiral) a lot of people focus on some shared functionality

that touches many parts of the system. In one project involving

multiple spacecraft, my team identified several system-wide functional

milestones that built on each other, then worked toward one after

another. We first demonstrated that we had a working development and

testing environment, then built a skeleton of the software

infrastructure, then built some simple applications on that

infrastructure. This approach incentivized people to work together on

shared goals that could be achieved in a month or two, rather than

having everyone work in a different direction.

The whole system development effort can be treated in a similar

way. Most projects have milestones that cut across all the work: early

decisions of whether to proceed on the project or not, demonstrations

of work in progress for funders, and so on. These can fit into the

development methodology by treating those milestones like the

endpoints of development spirals.

Summary.

This sequence shows how one can start with a component breakdown

structure, then apply life cycle patterns and a development methodology

to work out the tasks that the project will be performing.

This first step has been unrealistic because it is written as if all

the components are known at the beginning and nothing

changes. In the next section I discuss how to handle discovering the

component structure and adapting to changes.

21.4 How work grows and changes

In reality, one doesn’t know all a project’s components or milestones

at the beginning. They are discovered bit by bit as development

proceeds. More work is discovered as problems are found that need to

be fixed, or as stakeholders ask for changes.

At any given time, only so much of the system’s structure has been

worked out. People will know only a few artifacts that are needed

early in the project, and over time they will learn the rest of the

system structure.

To understand how the view of the component breakdown and associated

artifacts changes, consider four scenarios:

- Discovery of system structure as design proceeds;

- Needed rework, because of resource negotiation;

- Needed rework, because of design or implementation problems; and

- Change requests.

System structure discovery.

Discovery works generally downward and outward from what is already

known. Looking at a component breakdown structure early in the

project, one might see the top level system and a few of its first

level components; some of the first level components might have some

of their subcomponents identified; the rest of the system would be

unknown. Work would then add more first level components, flesh out

their subcomponents, and begin to work out relationships among the

components (Chapter 12). Over time all the components and

their relationships get identified.

This means that the high level structure—the top couple of layers of

components in the breakdown structure and their relationships—is

defined before the details of all those components are worked out. The

high level structure is expensive to change once it has been

established and other components are defined in its terms. This means

that it’s worth spending some time sketching out, modeling, or

prototyping some of the key parts of the high level components before

committing to their structure.

Consider the evolution of the design for an electrical power system,

or EPS, for a small spacecraft. It will be known from the beginning

that some kind of EPS is needed—after all, all of the avionics need

power. Many of the objectives and constraints are known early on as

well, such as the size and volume of the spacecraft, rough mass

limits, rough power needs of different subsystems, and the mission’s

approximate orbital geometry leading to estimates of how much time in

sun and how much in eclipse. Several of these will be negotiated and

refined as the project goes on, so changes will happen.

After this first step, the high level spacecraft design can be

committed, or baselined: the spacecraft will definitely need an EPS

and further work can proceed, assured that there will be one. The

design of the EPS and the specific demands on it are still unknown,

but it is now reasonable to proceed to work to discover that next

level of information.

When the time comes a little later to design the EPS, it can then be

broken down into a few components, following a common pattern for

small spacecraft. It will include solar panels for generation,

batteries for storage, a power distribution unit to control how power

is used, wiring to move energy around, a safety mechanism to disable

all power until the spacecraft has been deployed, a mechanism to

permanently drain and disable power, and various sensors.

This collection of components is initially a tentative proposal. The

rough design is not yet ready for people to put in greater effort

because there are likely many open questions: Are there appropriate

parts available? Can the approach meet general requirements? (Launch

safety requirements are a common source of EPS complexity.) Can the

EPS likely provide the power needed to run spacecraft systems? The

team investigates questions like these, typically including modeling

the EPS, looking for parts or suppliers, and constructing prototypes.

As these proposed components are tentatively added to the system, they

add to a tentative version of the component breakdown structure. This

implies that the artifacts involved in those components are

tentatively added to the system artifact graph, and the life cycle

patterns apply to create a number of tentative tasks. The result is a

large number of tentative artifacts and tasks.

Needed rework due to resource negotiation.

Many systems will have some resources that must be shared among

multiple components, and the demand from the components must be met by

the supply of the resource. Mass, power, bandwidth, and space are

common shared resources. A maximum mass provides a constraint on the

sum of the masses of all components. The power available at different

times must be sufficient to meet what electrical components will use;

the ability to generate electricity and the demand for it change as

the system goes through different activities. The demand for data

transmission is constrained by the capacity of communication

channels. Physical parts must be able to fit within the volume

available for them. Chapter 45 goes into more detail about

these kinds of resources.

Because the resources are shared, a change to one component can affect

the others. A component may be designed to meet its specification for

a particular maximum mass, for example, but if another component is

over mass the properly-designed component’s specification (and thus

design and implementation) may have to change to meet the shared

constraint.

Consider a system that has fifteen components and a constraint that

the overall mass must be less than $M$. Each component $i$ is given a

specification of its share of mass, $m_i$. The shares are set so that

$\sum_{i=1}^{15} m_i < M$. (Best practice is to reserve some fraction of $M$

as margin, to absorb estimation errors.) As component designs proceed,

three components come in over their budgeted share, two well under

their share, and the total is more than $M$. The team needs to find

savings in some components to bring the total below the maximum. This

involves negotiating among people responsible for the different

components; the team investigates alternate designs that might reduce

mass. The end result is that the specifications for some components

will change to give them a lower share $m_i$, and some overweight

components may get a larger share.[1]
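
The mass example can be made concrete with a small budget check. This is a sketch under stated assumptions: the function name `over_budget`, the 10% default margin, the five components (rather than fifteen), and all the numbers are hypothetical. It flags which components exceed their budgeted share and whether the total still fits under the maximum mass.

```python
def over_budget(shares, estimates, max_mass, margin_fraction=0.1):
    """Compare current mass estimates against budgeted shares.

    shares and estimates map component name -> mass in kg.
    Returns (total estimated mass, sorted names of components over their
    share, True if the total is below the maximum mass).
    """
    # Part of the maximum is held back as margin and not allocated.
    allocatable = max_mass * (1.0 - margin_fraction)
    if sum(shares.values()) > allocatable:
        raise ValueError("shares exceed the allocatable mass")
    total = sum(estimates.values())
    over = sorted(name for name in shares if estimates[name] > shares[name])
    return total, over, total < max_mass


# Hypothetical numbers: a 100 kg limit with 88 kg allocated as shares.
shares = {"structure": 30, "EPS": 20, "avionics": 14, "payload": 14, "propulsion": 10}
estimates = {"structure": 38, "EPS": 27, "avionics": 18, "payload": 11, "propulsion": 8}

total, over, fits = over_budget(shares, estimates, max_mass=100)
print(total, over, fits)
```

Here three components are over their share, two are under, and the total exceeds the limit, so the team would need to negotiate new shares as described above.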

The tasks involved can be expressed in a life cycle pattern. The

pattern starts with a task to identify and report on the resource

problem, followed by investigating and negotiating ways to change

resource allocations. The investigation is usually done by people

handling multiple affected components working together, not

independently. Once the team has found a new set of allocations, it

gets reviewed. Once approved, the specification for each affected

component is updated and the pattern of tasks for building the

component is reiterated. This means that designs and implementations

are changed, reviewed, and verified.

During the investigation and negotiation, the team creates several new,

tentative versions of component designs. These are typically rough

concepts or designs, not fully worked out. They are

hypothetical; they represent some way that the team might change

designs to reduce resource usage. Some of the alternatives will be no

better than the current approach. Some alternatives may use less

resource, but come with a tradeoff, such as changing some

functionality or moving it to some other component.

It helps the team if they keep track of these hypothetical designs,

along with the rationale of why an alternative might be an

improvement. If the alternative involves a potential change that will

affect other parts of the system, it is helpful to keep track of both

how the design changes the component’s specification and what it does

to other components. The team uses this information when choosing

the new approach for all affected components. They also use this

information to update each component’s specification and design.

Needed rework, because of problems found.

No real team is always perfect; people will find problems in work that

has already been done. A project should have a general approach for

how people handle finding and fixing such errors.

Dealing with a problem begins with detecting that the problem

exists. This might come from observing an error during verification;

it might come during a review of a design or implementation; it might

come when interpreting a specification to build a design or

interpreting a design to implement a component. The people who found

the problem create a problem report artifact to record what they have

found.

The next step is to investigate to determine where the sources of the

problem lie. This might be within the implementation of one

component—the easy case—but it might reflect a specification or

design flaw crossing multiple components. The example of the Mars

Polar Lander loss discussed in [Leveson11, Chapter 2] shows a

case where individual components behaved according to their

specification, but the components were not mutually consistent. In

that case, a sensor could produce transient signals that led the

control system to conclude that the spacecraft had landed and thus

shut down propulsion; in fact it was still at some altitude and thus

crashed. This example shows a case where a problem results from incorrect

emergent behavior, and the “cause” is distributed across multiple

components.

Following the investigation, there is a decision about how to fix the

problem. This might include changing the specification of one

component, and changing the designs of a couple others. The decision

may include evaluating multiple possible ways to solve the problem

before deciding. The evaluation might include prototyping fixes and

testing them.

Once decided, the steps to make the changes follow. If the decision is

to change a component’s specification, this leads to changes in the

design, implementation, verification design and implementation, and

eventually verification of that component against the revised

specification.

This sequence of events can be divided into two parts: a first part

involving detecting a problem and deciding on a fix, and a second part

that is a reiteration of the steps involved in making or updating

components. The investigation and decision part involves some new

artifacts: records of the problem report, of the analysis, and of the

decision. The second part involves updates to existing artifacts,

starting with whatever artifacts need to be fixed and then following

the normal life cycle pattern for building the affected component. The

overall flow is similar to that for handling resource overage.

Note that making a change to one component’s artifact usually leads to

updating artifacts that follow from it: changing a specification means

changing design and verification approach to match. Changing a design

means changing implementation. Changes to implementation lead to a

need to verify that the component is still correct; this

re-verification may continue up through several levels to ensure that

the changes integrate properly with other parts of the system. These

artifact changes also mean that tasks like performing reviews or

getting approvals will need to be repeated, depending on the

associated life cycle patterns.
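
The way one change ripples outward can be sketched as follows. This is a deliberately simplified illustration: the linear artifact order in `ORDER`, the `PARENT` map, and the function `affected_artifacts` are hypothetical, and a real artifact pattern has parallel tracks (such as verification design) that this sketch leaves out.

```python
# Hypothetical artifact order within one component: a change to one artifact
# means the artifacts after it need updating.
ORDER = ["specification", "design", "implementation", "verification"]

# Hypothetical breakdown: component -> parent component (None for the system).
PARENT = {"spacecraft": None, "EPS": "spacecraft", "batteries": "EPS"}


def affected_artifacts(component, artifact):
    """List (component, artifact) pairs to update after a change.

    Within the changed component, every artifact after the changed one is
    updated. Each enclosing component is then re-verified, up to the system.
    """
    start = ORDER.index(artifact)
    affected = [(component, a) for a in ORDER[start + 1:]]
    parent = PARENT[component]
    while parent is not None:
        affected.append((parent, "verification"))
        parent = PARENT[parent]
    return affected


ripple = affected_artifacts("batteries", "design")
print(ripple)
```

In this sketch, changing the battery design leads to updating the battery implementation and verification, then re-verifying the EPS and the spacecraft. Each of those updates would in turn bring its own reviews and approvals, depending on the life cycle pattern in use.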

Many times when I have received a software problem report, the problem

has been fairly simple. There has been a simple typo in a user

interface message, or an off-by-one error in a computation. It hasn’t

required a lot of investigation or decision-making about how to solve

the problem; it’s a few characters changed in one line of source

code. This leads to an abbreviated version of the task flow above: get

the report, look at the code, fix the one line, check that it works,

done. The tools and processes involved should make easy situations

like this easy to handle. In software development, many of the tools

streamline these processes: a problem report system connects with a

version control system, and the version control system enforces

getting reviews and approval before a version is baselined.

At the other extreme, some problems are complex enough that they will

need careful analysis first, and implementing fixes will require

several people to work together. A simple mapping of the artifacts

involved to tasks to update each one doesn’t reflect that some changes

have to be done together: co-designing changes to components that

interact, for example.

Change requests.

When a customer or other stakeholder changes what they need, the

system may need to be changed as a result. A request for a change is

not an indication that there is a flaw in the system, but only a

request for something different than what they asked for

before. Changes don’t always happen just because a stakeholder asked;

sometimes the project decides not to make the change.

Change requests follow a similar flow to the one for fixing

problems. Someone realizes the desire for a change, and creates a

change request artifact. The team evaluates the change request to

determine whether to investigate the request or not. They then

investigate the scope of the change to learn how much work it might

be to build; this work often includes (at least informally) creating

new versions of the system purpose and concept. The investigation also

includes updating analyses to see if making the change will cause

problems for other stakeholder needs—for example, requiring more

investment from funders to make the change, or interfering with safety

or security needs.

The reporting and investigation steps record information in a number

of artifacts:

If the team then decides to pursue the change, they

begin tasks that update the system purpose and concept, then flow

the changes into system specifications, and so on down to the

affected components.
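
The change request flow described above can be read as a small state machine. The following is a minimal sketch in Python, not a description of any particular tool. The state names and the `advance` helper are hypothetical, chosen only to mirror the steps in the text: report, evaluate, investigate, decide, and do the work.

```python
# Hypothetical states for a change request, mirroring the flow in the text.
# Each state maps to the set of states that may follow it.
TRANSITIONS = {
    "reported": {"investigating", "declined"},   # team evaluates the request
    "investigating": {"accepted", "declined"},   # scope and impact are analyzed
    "accepted": {"in work"},                     # tasks update purpose, concept, specs
    "in work": {"complete"},
    "declined": set(),                           # the project chose not to change
    "complete": set(),
}


def advance(state, new_state):
    """Return new_state if the move is allowed, otherwise raise ValueError."""
    if new_state not in TRANSITIONS[state]:
        raise ValueError(f"cannot go from {state!r} to {new_state!r}")
    return new_state


# A request that is investigated and then declined.
state = "reported"
state = advance(state, "investigating")
state = advance(state, "declined")
```

Making "declined" a terminal state reflects the point that a change does not always happen just because a stakeholder asked for it.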

Note that by definition change requests from stakeholders only involve

changing the system as a whole. The internals of the system are the

project’s responsibility, and stakeholders only know about what’s

inside when they need to perform some validation activity. For

example, certification of an aircraft validates that its design and

implementation meet airspace regulations. The regulator only gets to

specify the standards an aircraft must meet to be considered

acceptable, and on validation they can find that the design does not

meet those standards, but the regulator cannot request a change to a

specific component’s design (though it may sometimes seem like they do

that).

21.5 Managing artifacts

Tasks are for generating output artifacts based on input

artifacts. That is, artifacts are the means of communicating

information between tasks.

All the work output—that is, artifacts—needs to be stored and

maintained to be useful. Those who need a particular artifact as input

need to be able to find it, and know what to do with their

results. Other people later need to find all those artifacts to check

the work or to make changes as the system evolves.

People often work on multiple versions of an artifact while they are

working on a task. Someone builds up the requirements for a component

step by step until they believe the requirements are complete. Two

people might work on two separate alternative designs for a component,

and eventually the project decides which design artifact will be

used. Two other people might collaborate on a component

implementation, sharing updates as they build the component. A group

of people might be building component implementations while someone

else looks into a change request that will change some of the

components’ specifications, and thus designs and implementations. In

all these cases there can be multiple versions of artifacts, with some

of the versions tentative works in progress while other versions are

settled.

When there are multiple versions, people need to use the right version

for their task. Two people working together on a component design want

to be sharing the same in-progress version. Someone implementing a

component, on the other hand, wants to use the current, approved

design version—not an out of date version, and not some work in

progress that is constantly changing.

More generally, the project can have multiple versions of some

artifact at any given moment. Some of these versions will be works in

progress; others will be tentatively completed but not yet reviewed

and approved. One version might be the stable baseline version. Some

time later the baseline version might be replaced, and the old

baseline becomes obsolete.
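
The version states described here can be sketched as a small data structure. This is an illustrative sketch only: the `Status`, `Version`, and `ArtifactStore` names are hypothetical, and a real project would normally rely on a version control or configuration management tool rather than code like this.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    IN_PROGRESS = "work in progress"
    TENTATIVE = "tentatively complete, not yet approved"
    BASELINED = "baselined"
    OBSOLETE = "obsolete"


@dataclass
class Version:
    artifact: str
    number: int
    status: Status


class ArtifactStore:
    """Hypothetical in-memory record of artifact versions."""

    def __init__(self):
        self.versions = []

    def add(self, artifact, status=Status.IN_PROGRESS):
        """Record a new version of an artifact, numbered from 1."""
        number = 1 + sum(1 for v in self.versions if v.artifact == artifact)
        version = Version(artifact, number, status)
        self.versions.append(version)
        return version

    def baseline(self, version):
        """Promote a version; any previous baseline becomes obsolete."""
        for v in self.versions:
            if v.artifact == version.artifact and v.status is Status.BASELINED:
                v.status = Status.OBSOLETE
        version.status = Status.BASELINED

    def current_baseline(self, artifact):
        """Return the version others should build on, or None if there is none."""
        for v in self.versions:
            if v.artifact == artifact and v.status is Status.BASELINED:
                return v
        return None
```

Someone implementing a component would ask for `current_baseline("component design")`, while two people collaborating on a design would share a specific in-progress `Version` instead.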

The distinction between artifact versions that are tentative and those

that are committed or baselined is how much others can rely on the

state of the artifact remaining stable. If artifact A is stable, then

someone can use it when working on another artifact B without risking

that the work on B will become obsolete because A changes. In other

words, the distinction is based on the uncertainty in A.

Uncertainty comes in varying degrees. One thing can be more or

less uncertain than another; almost nothing will be completely certain

and few things will be completely uncertain.

An artifact version’s status as tentative or baselined, on the other

hand, is a binary condition. The status thus obscures some of the

information about a version of an artifact. The status does, however,

reflect a decision: whether the uncertainty in a version is low

enough for others to work from or not.

Many artifacts need to be consistent with each other. For example,

consider the designs of a set of components that work together. Their

designs aren’t complete until they are consistent so that each

implements the behaviors that others need. If one component’s design

changes, the other designs must be at least checked and maybe changed

to fit how the one component has changed. To support this, a group of

artifacts can be a work in progress, tentative, baselined, or

obsolete.

This is similar to techniques used in software version control. In

these tools, people make changes to local copies of files, then check

the changes in to working branches. The working branches are a view

of a new tentative system version. At some point the contents of

working branches are pushed to a master branch, representing the

committed (baselined) information that everyone is working with. There

are usually several independent tentative versions (working branches)

being developed in parallel, and they will need to be reconciled at

some point. The tools enforce checks and reviews before changes are

merged into the master branch (baselined or committed).

While the versions can be managed by hand, today almost all projects

use automated tools to coordinate and store artifact

versions. Software version control systems and document or

configuration management systems provide tools for tracking which

versions are available and what their states are.

21.6 Uncertainty and choosing tasks

All of these ways that systems change share something in common:

unknowns. While a system is being designed, parts of it will be

unknown. When resources need to be traded or when problems need to be

fixed, it is not known how the situation can be resolved. Before parts

of the system have been verified, there will be flaws, but what they

are and where they lie are unknown.

I have discussed how new work is tentative while it is in

progress, and at some point the work is baselined so that others can

use that work. Promoting an artifact from tentative to baseline depends

on whether that artifact is likely stable enough that changes aren’t

going to come along too often, causing additional work on the

artifacts that depend on this one. In other words, being baselined

depends on how much is still unknown about the artifact.

These aspects of work are reflections of uncertainty. Uncertainty

about an artifact is a measure of what is not yet known about it, or

what changes may happen in the future.

Uncertainty matters because the areas of uncertainty are where work

will be done—and because the amount of work is hard to estimate.

Uncertainty is a normal and inescapable part of building a

system. After all, at the start of a project nothing is known about

the system that will result except a vague idea of what it might be

for. System-building can be viewed as a process starting with

everything uncertain, then step by step resolving uncertainties over

time as more and more artifacts are understood, designed, and checked.

At the end of a project, when a system has been implemented, verified,

and accepted, there are by definition no uncertainties left. The

challenge is to deal with uncertainties in a way that resolves the

most important ones early and keeps the team’s work from becoming

chaotic.

Uncertainty takes many forms. Sometimes it is just something that

hasn’t been built yet, like implementation of a component. Sometimes

it is something deeper, like what some set of components should do

or how some key part of the design might work. Sometimes it is

whether things will pass verification, especially whether parts will

integrate together properly and produce the desired emergent

behaviors. Many forms of uncertainty fall into four categories:

unknown content, unknown feasibility, unknown errors, and unknown

integration.

Kinds of uncertainty.

There are many things that can be unknown, and some of them will be

“unknown unknowns”: things that one is not yet even aware will be

needed.

- Unknown content. These are artifacts, or parts of artifacts, that

haven’t been built yet. For example, it may be clear that a

spacecraft’s power system needs energy storage, but when it hasn’t

been specified or designed, that part of the power system is

unknown. Or the design of the energy storage may be started, but it

has not yet been completed because the safety-related requirements

haven’t been worked in or evaluated yet. This is a kind of known

unknown: it can be clear when part of an artifact hasn’t been

developed yet.

- Unknown feasibility. This occurs when part of one artifact has been

developed, and it creates a situation where some dependent artifact

cannot be developed. In the spacecraft power system example, the

specification may have been written to include a maximum mass

constraint and a minimum energy storage amount. When the

energy-per-mass requirement in that specification is greater than

the capacity of any available storage technology, that specification

is not feasible. For another example, the design of the battery and

its connectors and wiring might meet specification, but be

impossible to manufacture because the wiring is buried inside some

structure. This is a kind of uncertainty that one can suspect

without knowing that it’s there.

- Unknown correctness or errors. A component can get through design

and implementation, but until it has been verified nobody knows

whether it actually works. After an implementation passes

verification, there still may be errors because verification is

a probabilistic evaluation: it can catch many problems but often

cannot guarantee that the component will

behave correctly in every situation. (Some analytic methods can

reach this level of assurance.) Witness the number of flaws found in

well-tested software, for example. When verification steps have not

been defined or verification has not been completed, the correctness

is a known unknown. Verification then leaves some amount of unknown

unknowns for the scenarios that have not been checked.

- Unknown integration. Two components can be designed and implemented

correctly to their specifications, but not interact correctly. When

they are designed, implemented, and verified individually, it is

still uncertain whether they will work together. In my experience,

many expensive errors in systems come from problems with

integration. Interacting components work together to create

emergent behaviors (Section 12.4), which arise

from the combination of their separate behaviors. These emergent

behaviors are verified at higher levels, either by analysis of the

components together or by testing them together. Until the

higher-level verification steps have been defined and performed, it

is uncertain whether the combination of components will have

flaws. As with verifying a component in isolation, the verification

steps not completed are known unknowns, while unknown unknowns can

hide in the set of conditions not verified.
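
The feasibility example in the list above (a maximum mass constraint combined with a minimum energy storage amount) comes down to simple arithmetic. The sketch below shows one way to check it. All the numbers and the technology names are hypothetical, chosen only for illustration, and the function names are my own.

```python
def required_specific_energy(min_energy_wh, max_mass_kg):
    """Energy per unit mass that the specification implies, in Wh/kg."""
    return min_energy_wh / max_mass_kg


def feasible_technologies(min_energy_wh, max_mass_kg, technologies):
    """Names of technologies whose Wh/kg meets or exceeds the requirement."""
    needed = required_specific_energy(min_energy_wh, max_mass_kg)
    return [name for name, wh_per_kg in technologies.items() if wh_per_kg >= needed]


# Hypothetical storage technologies and their specific energy in Wh/kg.
technologies = {"storage type A": 150.0, "storage type B": 250.0}

# 3000 Wh within 10 kg implies 300 Wh/kg, which neither option can provide.
print(required_specific_energy(3000.0, 10.0))             # 300.0
print(feasible_technologies(3000.0, 10.0, technologies))  # []

# Relaxing the mass limit to 15 kg implies 200 Wh/kg, which type B can meet.
print(feasible_technologies(3000.0, 15.0, technologies))  # ['storage type B']
```

A quick check like this, done while the specification is still tentative, turns a suspected feasibility problem into a known one before anyone builds on it.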

Effects.

Uncertainty can lead to problems when people try to build on something

that is uncertain, which is why an artifact is not baselined until the

uncertainty is low enough. When a specification is uncertain, people may

design the wrong thing, and if they proceed to implement that design

the amount of work that will need to be retracted and redone

increases.

The team can use information about uncertainty to guide decisions

about where to spend effort. Consider an artifact A that has some

uncertain aspects, and an artifact B that depends on it. Where should

effort be spent? This is an example of unknown content.

One possibility is to focus effort on completing A before working on

anything in B. This would likely reduce the chances that something in

B will get built that doesn’t match A after it has been completed. For

example, if the specification for a component is only partly done, the

design of that component could go off in some strange direction that

doesn’t meet the completed specification. This is an example of how

uncertainty in one artifact leads to uncertainty in its dependent

artifacts.

However, what if there is someone available who can work on artifact

B—the component design? They will be sitting idle until A is

complete. A second possibility for spending effort is to have someone

work on those parts of B for which A is fairly certain, having

informed them what aspects of A are not yet complete. If a component’s

specification is incomplete, the next person might work on parts of

the component design that won’t be affected by the missing parts of

the specification.
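
The second possibility can be sketched as a simple filter: given which parts of artifact A are settled, find the parts of artifact B that depend only on those. This is a minimal sketch under my own assumptions. The function name, the part names, and the idea of recording dependencies as sets are all hypothetical.

```python
def workable_parts(design_needs, settled_spec_parts):
    """Return the design parts whose specification inputs are all settled."""
    return sorted(
        part for part, needs in design_needs.items()
        if needs <= settled_spec_parts   # subset test: every input is settled
    )


# Hypothetical example: which specification parts each design part relies on.
design_needs = {
    "cell selection": {"energy storage", "mass limit"},
    "connector layout": {"mass limit"},
    "enclosure": {"mass limit", "safety"},
}

# The safety requirements have not been worked into the specification yet.
settled = {"energy storage", "mass limit"}

print(workable_parts(design_needs, settled))
# ['cell selection', 'connector layout']
```

The enclosure design waits because it depends on the unsettled safety requirements, while the other two parts can proceed with little risk of rework.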

If one person works on artifact A to completion and then moves on to

artifact B, it is uncertain whether it is possible to build artifact

B. For example, someone might work through a rigorous and complete

specification for a component—where the specifications call for

something impossible with current technology. This is an instance

where the uncertainty is about whether artifact A, the specification, is

feasible, rather than about whether it is complete. To avoid these

kinds of problems, people often do some investigating about design or

even prototyping before committing to a specification.

Also, when someone completes work on artifact A, what are the chances

that there are errors as yet undetected in that artifact? A

specification might have an inconsistency or a missed requirement; a

design or implementation something similar. The uncertainty about

correctness leads to uncertainty about how much rework or fixing will

be needed.

A final problem comes with integration: when multiple components are

being built independently, they often will not interact properly when

put together—that is, will not lead to the desired emergent behavior

of the components put together. This is an example of uncertainty

about integration, rather than uncertainty about content, feasibility,

or correctness. Teams often address this kind of uncertainty by

co-designing components that will interact, or by mocking up parts of

components so the interaction can be checked before investing in

finishing design and implementation of other parts of those

components.

Estimation uncertainty.

The uncertainty discussed here is related to uncertainty in estimating

cost or schedule [McConnell09, Chapter 4]. Note that this is the

aggregate uncertainty over the whole project’s schedule or cost, not

the uncertainty in specific parts of the work. Estimation practices

have developed the cone of uncertainty: the way in which the

uncertainty about estimates changes over time as more of the system is

worked out, and requirements (specification) and design are

completed. Studies based on historical experience show that the cone

of uncertainty has a 4x range of variability for a well-run software

project.

While estimation uncertainty is not the same as artifact uncertainty,

there are lessons to learn from estimation. The cone of uncertainty

only narrows when the project is well run (the solid lines in the

graph above). McConnell gives examples of the kinds of development

process mistakes that lead to uncertainty staying high (or

increasing), including failing to work out specifications well, having

unstable requirements, poor design and implementation problems that

lead to errors to fix, and failing to plan the work. Unfounded

optimism about the team’s capability or progress and bias about

uncertainty often cause problems with estimation. These can lead to a

project where the uncertainty does not converge to zero, or even

increases (the dashed line in the graph). Similarly, the uncertainty

in system artifacts will only decrease in general if the project is

run to avoid these kinds of problems.

Ideally, one would like to be able to measure uncertainty, but it’s

generally not possible. Estimation practices for well-understood kinds

of projects, such as developing software of a particular kind, can

establish a likely lower bound on variability—but that is different

from being able to say what and how much uncertainty exists in a

specific artifact, or providing a bound for a new kind of system.

Uncertainty thus has to be treated qualitatively. One can usually say

that some component has a little uncertainty, medium, a lot, or

complete uncertainty. (I have sometimes called this “how scared am I

about this?”) That’s enough for making good enough decisions about how

to direct work effort.

Note that the known uncertainty can be quite different from the

actual uncertainty. Consider two points in time, one a couple weeks

into a project and another two months later. At the first time point,

the team will still be discovering stakeholder needs and starting to

work out the system purpose and concept. There are probably a small

number of large uncertainties then: what the system will be like is

unknown, and there may be some indication of particular capabilities

that may require unknown solutions. Two months later, the team might

be well into the system concept and the number of needed technology

solutions will have ballooned. It will likely feel like the project

has become far more uncertain in those months. In fact all those

unknowns were always there but hadn’t been discovered yet; they hadn’t

gone from unknown unknowns to known unknowns.

Using uncertainty.

I will discuss in a later chapter (Chapter 22) how to

use uncertainty to help guide a project’s work. In short, putting

effort where uncertainty is greatest is often a good heuristic for

choosing what to work on. Where the greatest uncertainty lies changes

over the course of a project: early on, it is in developing an

understanding of what the purpose and concept are (unknown

content). Later, it moves to working out some of the key decisions

about system structure and key enabling technology (unknown content

and feasibility). As work progresses into design and implementation,

the ability of the high-level parts of the system to integrate

together becomes the most important uncertainty (unknown integration).
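
The heuristic of putting effort where uncertainty is greatest can be sketched using the qualitative scale from earlier in this section (a little, medium, a lot, complete). This is only an illustration of the idea, not a planning method. The `Uncertainty` scale, the `rank_by_uncertainty` function, and the artifact names are hypothetical.

```python
from enum import IntEnum


class Uncertainty(IntEnum):
    """Qualitative uncertainty levels; the numeric values only give an ordering."""
    LITTLE = 1
    MEDIUM = 2
    A_LOT = 3
    COMPLETE = 4


def rank_by_uncertainty(artifacts):
    """Order (name, uncertainty) pairs from most to least uncertain.

    Python's sort is stable, so artifacts with equal uncertainty keep
    the order in which they were listed.
    """
    return sorted(artifacts, key=lambda item: item[1], reverse=True)


# Hypothetical assessment partway through a project.
artifacts = [
    ("system concept", Uncertainty.MEDIUM),
    ("inter-component communication design", Uncertainty.A_LOT),
    ("power system specification", Uncertainty.LITTLE),
]

for name, level in rank_by_uncertainty(artifacts):
    print(f"{level.name:8} {name}")
```

Because the levels are judgments rather than measurements, the ranking is a prompt for discussion about where to spend effort, and it needs to be redone as the project moves on.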

The choices for development methodology and life cycle patterns

reflect how a project chooses to address uncertainty. The heuristic of

focusing on greatest areas of uncertainty affects the choice of

development methodology, as I will discuss in the next chapter. It

also affects how the life cycle patterns include

checking for uncertainty (or its resolution) in the flow of tasks for

building parts of the system.

21.6.1 Uncertainty versus risk

Uncertainties are different from risks (in the project management

sense).

A risk is generally seen as “the potential for performance

shortfalls, which may be realized in the future, with respect to

achieving explicitly established and stated performance requirements”

[NASA16, p. 138]. Risks, in this sense, are fundamentally

potential: they may or may not happen. Many risks are external to

the system being built: the risk that the project will not get

funding, the risk that a vendor will not deliver a component on

schedule. Risks are generally categorized by the likelihood and

consequence of occurrence; they are defined in terms of scenarios that

could happen.
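
Categorizing risks by likelihood and consequence can be sketched as follows. This is one common convention, not a definition taken from the cited source. The 1 to 5 scales, the multiplication used for scoring, and the `Risk` and `rank_risks` names are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    scenario: str
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    consequence: int   # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self):
        """Assumed scoring rule: likelihood multiplied by consequence."""
        return self.likelihood * self.consequence


def rank_risks(risks):
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


# Hypothetical ratings for the two external risks mentioned in the text.
risks = [
    Risk("project does not get funding", likelihood=2, consequence=5),
    Risk("vendor does not deliver a component on schedule", likelihood=3, consequence=4),
]

for risk in rank_risks(risks):
    print(risk.score, risk.scenario)
```

Note that every entry is a scenario that may or may not happen, which is what separates this list from a list of uncertainties about artifacts.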

An uncertainty, on the other hand, is a lack of knowledge about part

of the system or its artifacts: not knowing how to design a component

to achieve some behavior, or not knowing what will be in a

specification artifact. An uncertainty about a system artifact has in

fact happened, even if no one is yet aware of the lack; it is not a

potential lack of knowledge. The consequences of that lack may or may

not be predictable.

Uncertainty and risk are certainly closely related. It is uncertain

whether a risk’s situation will occur (and thus result in the risk’s

consequence). An uncertainty will lead to a consequence, even if that

consequence is only doing the expected work to complete something.

A team handles uncertainty and risk differently. Uncertainty is

handled by working through parts of artifacts where there is

uncertainty—prototyping, analyzing, or just doing the work to build

and check the artifact. Handling uncertainty is thus a matter of the

ordinary work of the project.

Handling risk, on the other hand, is a matter of planning for the

potential events. It is like handling safety or security: one starts

by working out what consequences (harms) one wants to avoid, then

working out the scenarios or conditions (hazards and environmental

conditions) that will lead to an event where the consequences happen

(accident). One then works out steps the project can take to reduce

the likelihood or severity of the consequence if it does occur.

Chapter 22: Development methodologies

17 April 2025

22.1 Purpose

A development methodology is the overall style of how a project

decides to organize the steps in developing the system. This includes

decisions like whether to develop the system in increments of

functionality, whether to design everything before building, whether

to synchronize everyone’s efforts to a common cycle, and so on. These

decisions are reflected in obvious ways in the life cycle patterns a

project uses.

There are many methodologies named in the literature: waterfall,

spiral, agile, and so on. Different sources interpret each of these

differently, and they are rarely compared on a common basis. Some of

these, like waterfall methodology, have evolved over time and do not

have a single clear source or definition. Others, such as agile

development, have a defining document (manifesto) to reference.

All of the methodologies I know of have come to be treated as dogma,

and are more often caricatured than treated thoughtfully. This is

unfortunate because each of the methodologies has something useful to

offer, while all of them are harmful to project effectiveness if taken

as dogma or used without thoughtful understanding.

22.2 Characteristics

These methodologies can be organized and compared based on a few

characteristics. A project can choose a methodology with the

characteristics it needs.

Size of design-build cycle.

Methodologies like waterfall use “big design up front”, where the

entire system is specified and designed before implementation

begins. Other methodologies break up development into many

specify-design-implement cycles.

The argument for doing as much design up front as possible is that

errors are easier and cheaper to catch and correct before

implementation than after. The arguments against are that in some

complex systems the design work is exploratory and requires

implementing part of the system to learn enough to know how to

design—or not design—critical system parts.

Many iterative methodologies claim to be better at supporting

adaptation as system purposes change.

Coupled or decoupled design-build.

Some iterative methodologies plan to complete adding a feature to the

system in one iteration, by executing an entire

specify-design-build-integrate cycle for that feature. Other

methodologies break up that cycle into multiple steps, and allow those

steps to spread across multiple iterations.

Advance planning.

Some methodologies emphasize planning out work activities as far as

possible into the future, while others focus on planning as little as

possible in order to adapt as needs change.

Planning to different horizons.

The argument for planning as far as possible into the future is that

it gives the team stability: they have a reasonable expectation of

what they should be working on now and have a sense of how that work

will flow into other tasks soon after.

The argument for planning to shorter horizons is that someone will

come along and change priorities or system purpose, and so the work

will need to be changed to adapt. Planning too far ahead is wasted

effort, it is argued, and gives teams a false sense of stability.

Regular release or integration.

When a methodology uses many design-implement cycles, at the end of

each cycle it can require that new implementations be integrated into

a partially-working system, or it can go farther and require that the

partially-developed system be releasable. Most iterative methodologies

recognize that very early partial systems may not be releasable

because they are too incomplete.

Regular release is feasible for products that are largely software,

where a new release can be put into operation for low effort. It is

less feasible for products that involve a large, complex hardware

manufacturing step between development and putting a system into

operation.

The choice of whether to release regularly or not is often dictated by

the relationship with the customer(s) and whether the system is still

being implemented the first time, or is in maintenance. Once the

system has been deployed, development is likely either for fixes or

for new features; these are often released and deployed as soon as

possible.

Synchronization across project.

Some methodologies that break up development into multiple iterations

align all the work being done at one time so that the iterations begin

and end together. Other iterative methodologies allow some work

iterations to proceed on different timelines from other work.

Synchronized versus unsynchronized tasks.

Synchronizing work iterations across the whole project can provide

common points to check that work is proceeding as it should and to

share information about progress. However, it can also break up

tasks that run far longer than others and result in a perception that

the synchronization is wasteful management overhead rather than

something useful.

Shared short-term purpose across project.

Iterative methodologies can focus the entire team on one set of

features across all the work going on at one time, or they can allow

different streams of work to have different focuses in the short term.

The argument for a shared focus is that the more people share a common

goal, the more they will be motivated to work together to meet that

goal and to defer work that does not address that common goal. The

argument for having multiple work streams with different focuses is

that too often a project will involve work from different specialties

and on different timelines: mechanically assembling an airframe and

building a flight control algorithm have little in common.

Shared purpose.

22.3 Commonly-discussed methodologies

I present three of the most commonly discussed development

methodologies in order to illustrate how they can be

characterized. Each of these methodologies has many variants, and all

are the subjects of debates comparing tiny details of each

variant. The purpose of this section is to illustrate how they can be

analyzed, not to capture all nuances of every methodology in use.

Summary of common development methodology characteristics.

Waterfall development.

This approach to development follows the major life cycle phases in

sequential order. It begins with concept development, moves through

specification to design, and only then begins implementation.

Waterfall development is well suited to building systems that have

decision points that are difficult or expensive to reverse. The NASA

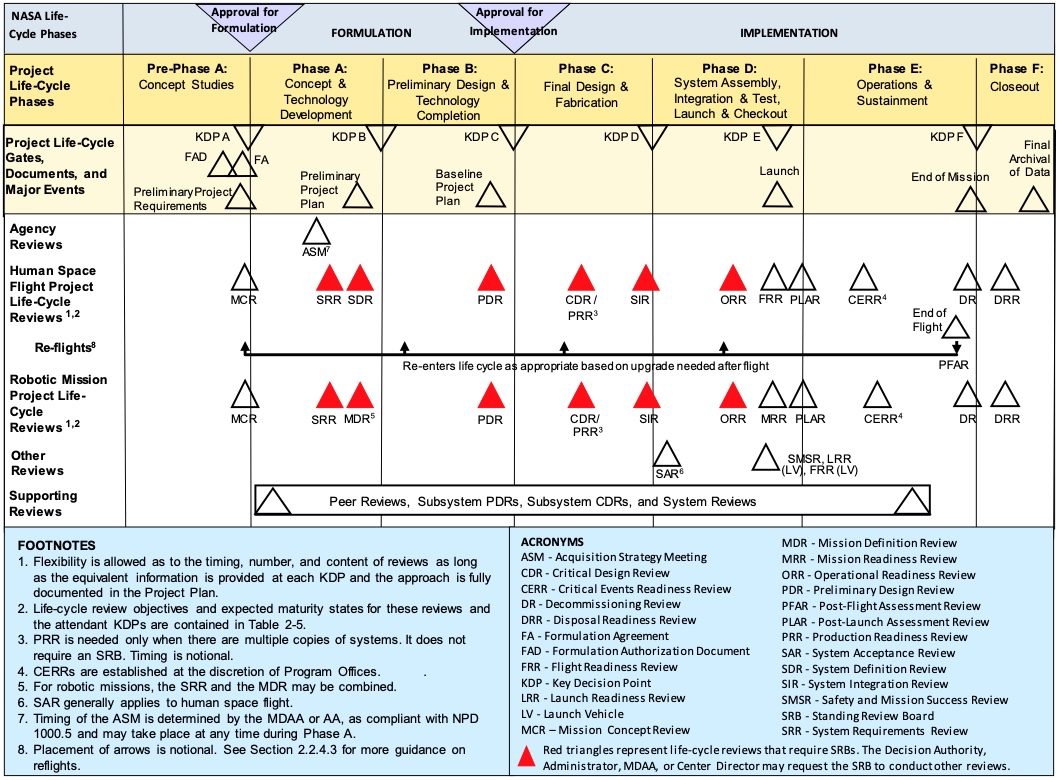

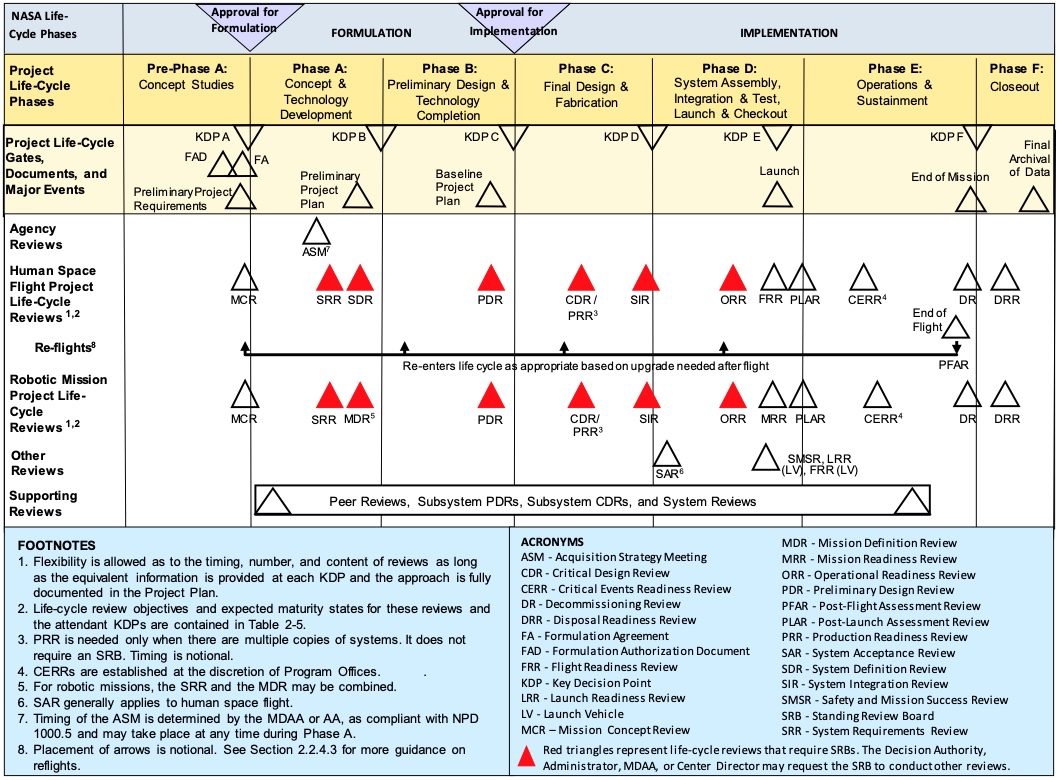

project life cycle (Section 24.2.1) follows a

waterfall-like sequence for its major phases because there are three

decision points that do not allow for easy adjustment: getting

government funding approval; building an expensive vehicle; and

spacecraft launch.

This methodology can be inefficient when the system cannot be fully

specified up front. When the system’s purpose changes mid-development,

or when some early design decision proves to have been wrong, the

methodology does not have support built in for how to

respond. Projects using this kind of methodology are known to have

difficulty sticking to schedules and costs that were developed early

in the project, usually because some event happened that

was not anticipated at the beginning.

In one spacecraft design project I worked on (Section 4.1),

the team assembled a giant schedule for the whole project on a

20-foot-long whiteboard. This schedule detailed all the major tasks

needed across the entire system. That schedule ended up requiring

constant modification as the work progressed.

Waterfall development requires great care when building a system with

significant technical unknowns. The serial nature of execution means

that some important decisions must be made early on, when little

information is available on which to base that decision. When those

unknowns are understood, the project can put investigation or

prototyping steps into the specification or design phases in order to

gather information for making a good decision. On the other hand, if

the team does not learn that some technical uncertainty exists until

the project is into the implementation phase, the cost of correcting

the problem can be higher than with other methodologies. In addition,

the sequential nature of execution can create an incentive for a team

to muddle through without really addressing the unknown, resulting in

a system that does not work properly.

In the spacecraft design project I mentioned, there were technical

problems with the ability of spacecraft to communicate with each

other. These problems were not properly identified and investigated in

the early phases of the project. As the team designed and implemented

parts of the system, different people tried to find partial solutions

in their own area of responsibility, but the team overall continued to

try to move ahead. In the end the problems were not solved and the

spacecraft design was canceled.

Iterative and spiral development.

This development methodology is characterized by building the system

in increments. Each increment adds some amount of capability to the

system, applying a specify-design-build-integrate cycle. Typically the

whole team works together on that new capability.

Early increments in such a project often build a skeleton of the

system. The skeleton includes simple versions of many components,

along with the infrastructure needed to integrate and test them. Later

increments add capabilities across many components to implement a

system-wide feature.

Teams using iterative development often plan out their work at two

levels: a detailed plan for the current iteration, and a general plan

for the focus of the iterations that will follow.

This methodology builds in more flexibility to handle change

than does the waterfall methodology.

Iterative development can be used to prioritize integration (Section 8.3.2), in order to detect and resolve problems

with a system’s high-level structure as early as possible. This

involves integration-first development, where the team focuses on

determining whether the high-level system structure is good ahead of

putting effort into implementing the details of the components

involved.

Agile development.

The agile methodologies—there are many variants—focus the team on

time-limited increments, often called sprints. The approach is to

maintain a list of potential features to build or tasks to perform

(the backlog). At the beginning of a sprint, the team selects a set of

features and tasks to do over the course of that sprint. By the end of

the sprint, the features have been designed, implemented, verified,

and integrated into the system. In other words, there is a life cycle

pattern that applies to building each feature within a sprint.

Agile development aims to be as responsive to changes as possible. The

start of each sprint is an opportunity to adjust the course of the

project as problems are found or the team gets requests for

changes. The agile methodologies arose from projects that were trying

to keep the customer as involved as possible in development, so that

the team’s work would stay grounded in customer needs and so that the

customer could give feedback as their own understanding of their needs

changed.

At their worst, the agile methodologies have been criticized for three

things: an excess of meetings, drifting focus, and difficulty

handling long-duration tasks. Note that these critiques come from

people in teams who claim to be using agile methodologies, and reflect

problems with the way teams implement agile approaches and not

necessarily problems with the definition of the methodology itself.

Agile development emphasizes continuous communication within a

team. In practice, this can lead to everyone on the team having

multiple meetings each day: daily stand-up meetings, sprint planning,

sprint retrospectives, and so on. This likely comes from teams using

meetings as the primary way to communicate, and from democratizing

planning decisions that could be made the responsibility of fewer

people.

Some agile projects have been characterized as behaving like a

particle in Brownian motion: taking a random new direction in each

iteration or sprint. This can happen when the team only looks at its

backlog of needed tasks each iteration, or when new outside requests

are given priority over continuing work. The focus on agility and

constant re-evaluation of priorities can lead teams to this behavior,

but it is not integral to the ideal of agile development. A team can

develop a longer-term plan and use that plan as part of prioritizing

work for each new sprint.
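
As an illustration only, the following sketch shows one way a longer-term plan could feed into sprint selection. It is not a prescribed procedure. The names BacklogItem, select_sprint, urgency, plan_goal, and plan_weight are hypothetical, and the scoring rule (urgency plus a fixed bonus for items that advance a current plan goal) is an assumption chosen to keep the example small.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BacklogItem:
    """One candidate feature or task in the backlog (hypothetical model)."""
    name: str
    effort_days: float
    urgency: float            # assumed 0..1 rating of how pressing the item is
    plan_goal: Optional[str]  # longer-term plan goal this item advances, if any


def select_sprint(backlog, capacity_days, current_goals, plan_weight=1.0):
    """Pick items for one sprint, favoring those that advance the plan.

    Items are ranked by urgency plus a bonus when they serve one of the
    plan's current goals, then taken greedily while capacity remains.
    """
    def score(item):
        bonus = plan_weight if item.plan_goal in current_goals else 0.0
        return item.urgency + bonus

    chosen, remaining = [], capacity_days
    for item in sorted(backlog, key=score, reverse=True):
        if item.effort_days <= remaining:
            chosen.append(item)
            remaining -= item.effort_days
    return chosen


backlog = [
    BacklogItem("telemetry link", 5, 0.4, "comms"),
    BacklogItem("new customer report", 4, 0.9, None),
    BacklogItem("antenna driver", 4, 0.3, "comms"),
    BacklogItem("ui polish", 2, 0.5, None),
]

with_plan = select_sprint(backlog, 10, {"comms"})
without_plan = select_sprint(backlog, 10, {"comms"}, plan_weight=0.0)
print([item.name for item in with_plan])
print([item.name for item in without_plan])
```

With the plan bonus in place, the sprint stays on the two communication items. With the bonus set to zero, the newest urgent request displaces continuing work, which is the drifting behavior described above.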

Finally, many complex systems projects involve long-running tasks that

do not fit the relatively short timeline of sprints or

iterations. Acquiring a component from an outside vendor or

manufacturing a large, complex hardware component does not really fit

the model of short increments.

22.4 Practical considerations

Most projects actually choose to use a hybrid of the different

methodologies. They may start from one of the generally available

methodology definitions, but they adapt that template based on the

needs of their project and their own experience. Projects often follow

different methodological approaches for different parts of the work:

the early work on stakeholders and purpose is often linear, with later

work done iteratively, for example.

In practice, the successful projects I have seen have applied common

sense in choosing the development methodology for their specific

project.

I have several general recommendations for making the choices about

what methodology to follow.

- The methodology should promote efficient and good quality work. This

means, in part, minimizing errors and rework. Forcing the pace to be

too fast can push people into sloppy work, not taking enough time to

think something through. Slicing up the work into pieces that are

too small can lead people not to consider the big picture of their

work.

- The methodology must be understandable by the team so that they all

work in the same, consistent way.

- Where possible, the methodology should give a focus to groups of

team members, rather than having each person working on something

unrelated, in order to foster a sense of shared responsibility and

to encourage communication.

- The methodology should provide a general, steady direction to the

work, so that team members are only rarely given drastic changes of

direction or assignment, and so that everyone both inside and

outside of the team gets an accurate sense of how much progress is

being made. A methodology like this promotes confidence that the

work is following a plan and that it can reach a successful

end. This typically means having a plan for the project’s work and

using that to guide decisions about what to work on (Part XIV). The development methodology includes steps for keeping

the plan current.

- The methodology should allow for multiple unrelated tasks to proceed

in parallel when there are people available to do so and work that

can be done separately. The amount of time that some team members

sit idle waiting for others should be as small as possible.

- The methodology should handle tasks being of different

lengths—some of them running for months, some for weeks, some for

days—without penalizing people working on different size tasks. I

have been on teams that held weekly status meetings, where the

people working on short tasks could report something exciting each

week but those working on months-long tasks would just report

“continuing to work on the task” each week. Those who had news each

week got greater attention and approval from other team members than

those working on long-duration tasks.

- The methodology must not impose a meeting workload or management

workload that detracts from actually being able to get the work

done. Many projects that have adopted agile methodologies report being

swamped by meetings; iterative methodologies do not have to have so

many meetings.

Beyond these general recommendations, which apply to any methodology a

team might choose, I have three specific practices that I have found

to be essential. These practices can be adopted in most methodologies

one might choose. They are, first, focusing on work that addresses

areas of high uncertainty in the system; second, having shared

milestones for many team members or the project as a whole; and third,

explicitly managing reporting and communication.

Focus on uncertainty.

I have said earlier that focusing on areas of high uncertainty is a

useful heuristic for choosing what to work on. While this is partly a

matter for tasking decisions, not development methodology, choices in

the development methodology can make this approach more or less

effective.

There is a general way to look at the kinds of uncertainty discussed

in Section 21.6. Uncertainty in one artifact is

related to uncertainty in another. Consider an artifact B that derives

somehow from artifact A. When the content in A is uncertain but B has

been worked out, it is uncertain whether B will actually fit with A or

not. For example, if a component’s design is built before its

specification, then the design could end up being wrong and needing to

be redone. On the other hand, if decisions have been made in A so that

its uncertainty is low, then it is uncertain whether B, which

derives from A, is feasible. A component’s specification might require

something impossible in that component’s design and implementation,

for example. Similar situations arise for artifacts that are supposed

to be consistent but do not derive from each other, such as the

designs of two components that are expected to work together. In

other words, it can be costly to make a decision in the absence of

understanding the consequences of that decision.

At the same time, decisions have to get made. Artifacts have to get

built and the system completed. The rationale for prioritizing work to

reduce uncertainty is that it reduces the greatest uncertainty first

and allows decisions to be made with more information than they might

otherwise be. This should, more often than not, result in better

decisions and thus less expensive rework later.
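
A minimal sketch of this prioritization follows, under stated assumptions. It is an illustration, not a description of any tool or of a definitive method. The Artifact class, the numeric uncertainty estimates, and the scoring rule (an artifact's own uncertainty multiplied by one plus the number of artifacts that derive from it, directly or indirectly) are all hypothetical. The rule is one way to express that uncertainty in A puts everything derived from A at risk of rework.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """One system artifact, such as a specification or a design."""
    name: str
    uncertainty: float  # assumed estimate from 0.0 (settled) to 1.0 (unknown)
    derives_from: list = field(default_factory=list)  # names of source artifacts


def downstream(artifacts, name):
    """Return the names of all artifacts that derive, directly or not, from name."""
    found = set()
    frontier = [name]
    while frontier:
        current = frontier.pop()
        for art in artifacts.values():
            if current in art.derives_from and art.name not in found:
                found.add(art.name)
                frontier.append(art.name)
    return found


def work_order(artifacts):
    """Order artifact names so the most consequential uncertainty comes first."""
    def score(art):
        return art.uncertainty * (1 + len(downstream(artifacts, art.name)))

    ranked = sorted(artifacts.values(), key=score, reverse=True)
    return [art.name for art in ranked]


artifacts = {
    a.name: a
    for a in [
        Artifact("spec", 0.6),
        Artifact("design", 0.5, ["spec"]),
        Artifact("implementation", 0.2, ["design"]),
        Artifact("test_plan", 0.7, ["spec"]),
    ]
}

print(work_order(artifacts))
```

In this example the test plan has the highest raw uncertainty, but the specification is ranked first because three other artifacts depend on it.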

My approach to making progress while managing uncertainty is to work

iteratively, exploring forward and backward informally through a set

of dependent artifacts. This balances different kinds of uncertainty

by trying out a decision in one artifact (such as specification) and

thinking about its effects on a dependent artifact (such as design or

implementation), and feeding information back to adjust the decisions

so that implementations are feasible and components integrate down the

line.

I have used a collection of techniques to work this way, generally

with good success. I have used different names for different related

techniques: iterative sketching, integration-first development,

multi-horizon planning, and continuous integration and

verification. These techniques work together, and can be incorporated

into most development methodologies.

Iterative sketching responds to my own desire to wonder about

consequences. When working on a component, or a set of components, I

often wander through them, imagining how one part of one component

might work. I sometimes mentally step through several components to

follow how they react to some external event. All of this results in

semi-organized, unofficial notes about all the pieces: notes on

requirements and design approaches, on key implementation ideas, on

what might be used to verify that a component or collection of them

works. In the process I come to understand what is needed and where the

uncertainties are. What kinds of safety or security needs does the

component have? What technologies could be used to implement it? The

act of sketching also moves back and forth among artifacts, so that I

can check whether a specification or design approach is likely

feasible, or adjust a specification when I find that it leads to

something unobtainable.

Sketching has two other benefits besides avoiding infeasible